Access and Port Requirements for Installation

Addresses Required for Installation

archive.ubuntu.com (Ubuntu) / cdn.redhat.com (Red Hat Enterprise Linux)

*.docker.com

*.docker.io

*.k8s.io

*.docker.pkg.dev

*.amazonaws.com (required because registry.k8s.io may redirect image downloads to mirrors hosted on Amazon Web Services; see the Kubernetes documentation for details)

*.mongodb.org

artifacts.elastic.co
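When a download fails during installation, it helps to check whether the blocked host is actually covered by the list above. The Python sketch below assumes that the firewall or proxy treats a leading `*.` as "any subdomain" (so `*.docker.io` covers `registry-1.docker.io` but not the bare `docker.io`); the pattern semantics of your own proxy may differ.

```python
from fnmatch import fnmatchcase

# Allowlist copied from the list above. "archive.ubuntu.com / cdn.redhat.com"
# is split into two entries; only the one for your OS family is needed.
ALLOWED_PATTERNS = (
    "archive.ubuntu.com",
    "cdn.redhat.com",
    "*.docker.com",
    "*.docker.io",
    "*.k8s.io",
    "*.docker.pkg.dev",
    "*.amazonaws.com",
    "*.mongodb.org",
    "artifacts.elastic.co",
)


def is_allowed(host: str, patterns: tuple[str, ...] = ALLOWED_PATTERNS) -> bool:
    """Return True if host matches one of the allowlist patterns.

    "*" may span several labels, so "*.k8s.io" also matches "a.b.k8s.io".
    A leading "*." never matches the bare parent domain itself.
    """
    # Normalize case and a trailing root dot before matching.
    host = host.lower().rstrip(".")
    return any(fnmatchcase(host, pattern) for pattern in patterns)
```

For example, `is_allowed("registry.k8s.io")` is `True`, while `is_allowed("docker.io")` is `False`, which would indicate that the bare domain needs its own entry if your tooling contacts it directly.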

These port requirements have been verified for Kubernetes 1.31.0 and Flannel 0.26.1; they may differ in other Kubernetes and Flannel versions.

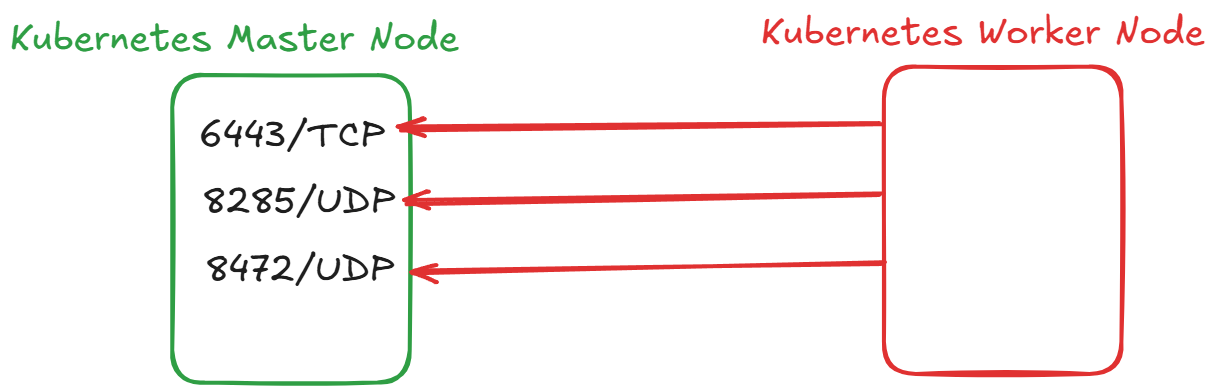

Port Access from Kubernetes Worker Servers to Master Servers

The network diagram shows which ports must be opened in a Kubernetes cluster so that worker nodes can reach master nodes. Opening these ports keeps communication healthy between the public and private network zones of the cluster.

The ports shown in the diagram are required for the Kubernetes Worker Nodes to communicate with the Master Nodes, and they must be open from all Worker Nodes to all Master Nodes for the cluster to function correctly.

Note: All default ports can be changed; if you change them, update the firewall rules and related permissions carefully to match.

Port Requirements of Kubernetes Master Servers

Ports that must be open on the Master/Control Plane servers for traffic from the Worker servers:

| Port | Description |
|---|---|
| 6443/tcp | Kubernetes API server |
| 8285/udp | Flannel (UDP backend) |
| 8472/udp | Flannel (VXLAN backend) |
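From a worker node, TCP reachability of the API server port can be spot-checked with a plain socket connection. A minimal Python sketch follows; note that the Flannel UDP ports cannot be verified this way, because UDP has no handshake and a successful send proves nothing about delivery.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection performs the full TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False
```

Run it from each worker against every control plane address, for example `tcp_port_open("<MASTER_IP>", 6443)`; a `False` result points to a firewall rule or a stopped API server.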

Port Access from Kubernetes Master Servers to Worker Servers

Connections from the Kubernetes Master/Control Plane servers to the Worker servers are required for communication between the Kubernetes cluster components. These ports must be open from all Master Nodes to all Worker Nodes for the cluster to function correctly.

Port Requirements of Kubernetes Worker Servers

Ports that must be open on the Worker servers for traffic from the Master/Control Plane servers:

| Port | Description |
|---|---|
| 10250/tcp | Kubelet API |
| 8285/udp | Flannel (UDP backend) |
| 8472/udp | Flannel (VXLAN backend) |
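On hosts that use firewalld (common on the Red Hat family; Ubuntu hosts often use ufw instead), the worker-side rules above can be restricted to the master addresses with rich rules. The Python sketch below only builds the command strings; the IP address in the usage example is hypothetical, and the commands should be reviewed before running them as root.

```python
def rich_rules(sources: list[str], ports: list[str]) -> list[str]:
    """Build firewall-cmd commands that accept the given ports only from sources.

    Ports use the "<port>/<protocol>" or "<first>-<last>/<protocol>" notation,
    e.g. "10250/tcp" or "2379-2380/tcp".
    """
    commands = []
    for src in sources:
        for spec in ports:
            port, proto = spec.split("/")
            # One rich rule per (source address, port) pair.
            rule = (
                f'rule family="ipv4" source address="{src}" '
                f'port port="{port}" protocol="{proto}" accept'
            )
            commands.append(f"firewall-cmd --permanent --add-rich-rule='{rule}'")
    # Permanent rules only take effect after a reload.
    commands.append("firewall-cmd --reload")
    return commands
```

For a worker, call it with the master addresses and the ports from the table, for example `rich_rules(["10.0.0.11"], ["10250/tcp", "8285/udp", "8472/udp"])`.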

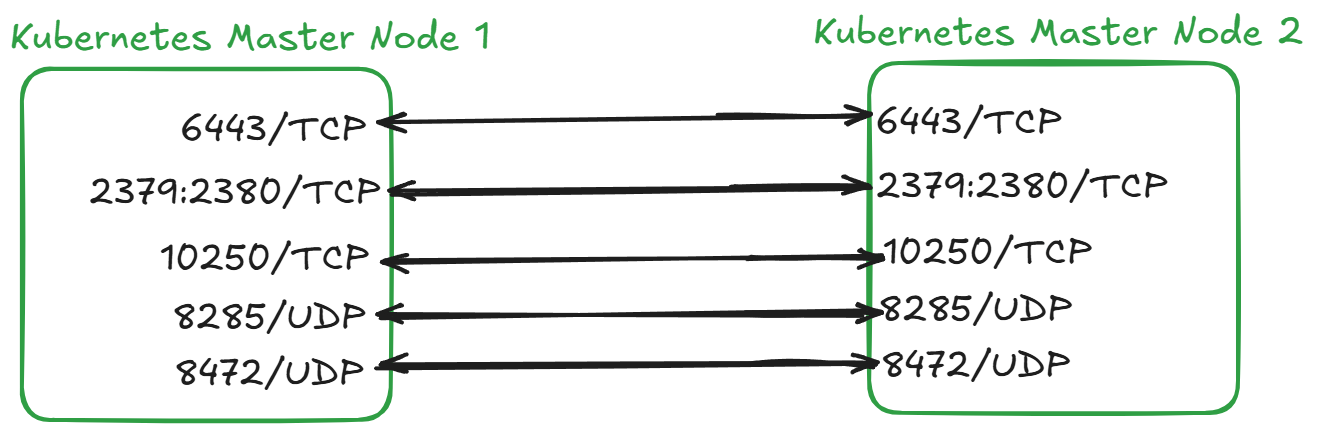

Port Access between Kubernetes Master Servers

Connections between the Kubernetes Master servers are required for communication between the Kubernetes cluster components. These ports must be open between all Master Nodes for the cluster to function correctly.

Ports that must be open between all Master servers:

| Port | Description |
|---|---|
| 6443/tcp | Kubernetes API server |
| 2379-2380/tcp | etcd server client API (2379) and peer communication (2380) |
| 10250/tcp | Kubelet API |
| 8285/udp | Flannel (UDP backend) |
| 8472/udp | Flannel (VXLAN backend) |
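Keeping the three tables as data makes it easy to compare them with what a firewall actually allows. A hypothetical Python representation follows; the role names `master` and `worker` are labels chosen for this sketch, and the etcd range is written with a dash.

```python
# Port matrix from the tables above: (source role, destination role) -> ports.
PORT_MATRIX = {
    ("worker", "master"): {"6443/tcp", "8285/udp", "8472/udp"},
    ("master", "worker"): {"10250/tcp", "8285/udp", "8472/udp"},
    ("master", "master"): {"6443/tcp", "2379-2380/tcp", "10250/tcp",
                           "8285/udp", "8472/udp"},
}


def required_ports(src_role: str, dst_role: str) -> set[str]:
    """Ports dst_role must accept from src_role (empty if the pair is not in the tables)."""
    # Return a copy so callers cannot modify the matrix by accident.
    return set(PORT_MATRIX.get((src_role, dst_role), ()))


def missing_ports(src_role: str, dst_role: str, opened: set[str]) -> set[str]:
    """Ports from the matrix that are not yet in the set of opened ports."""
    return required_ports(src_role, dst_role) - opened
```

For example, if only the Kubelet port has been opened on the workers, `missing_ports("master", "worker", {"10250/tcp"})` reports the two Flannel ports that are still closed.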

Note: If additional applications such as an Ingress Controller, Metrics Server, Rancher or Lens will be used, or if Kubernetes will be installed in a cloud environment, check their port requirements separately.

Kubernetes High Availability Cluster Setup

On the Kubernetes Master/Control Plane and Worker servers, the necessary permissions must be defined so that the virtual IP (VIP) of the Load Balancer can be reached on port 6443. The Load Balancer forwards traffic arriving on this VIP to port 6443 of the Master nodes and distributes it among them.

Port Requirements of Apinizer Components

By default, Kubernetes assigns NodePort services, which provide external access, ports in the range 30000-32767. Apinizer uses certain default ports in this range, but they can be customized as needed.

For Worker servers:

| Port | Description |
|---|---|
| 32080/tcp | Default NodePort for accessing the API Management Console. |
| 30180/tcp | Default NodePort for API Portal access. |
| 30080/tcp | Default NodePort for Apinizer API Gateway access. Different ports can be used if more than one environment is to be installed. |
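When a second environment is installed, its gateway NodePort must differ from the first one and still fall inside the NodePort range. A small Python check follows, assuming the cluster keeps the default range of the API server's `--service-node-port-range` flag; the environment names are illustrative.

```python
# Default NodePort range (kube-apiserver --service-node-port-range).
NODEPORT_MIN, NODEPORT_MAX = 30000, 32767

# Apinizer defaults from the table above.
DEFAULT_NODEPORTS = {
    "API Management Console": 32080,
    "API Portal": 30180,
    "API Gateway": 30080,
}


def check_nodeports(ports: dict[str, int]) -> list[str]:
    """Return a list of problems: ports outside the range or used twice."""
    problems = []
    seen: dict[int, str] = {}
    for name, port in ports.items():
        if not NODEPORT_MIN <= port <= NODEPORT_MAX:
            problems.append(f"{name}: {port} is outside {NODEPORT_MIN}-{NODEPORT_MAX}")
        if port in seen:
            # The first service that claimed the port is reported.
            problems.append(f"{name}: {port} is already used by {seen[port]}")
        else:
            seen[port] = name
    return problems
```

An empty list means the planned ports are usable; reusing 30080 for a hypothetical "API Gateway (test env)" would be reported as a conflict with the existing gateway.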

For MongoDB servers:

| Port | Description |
|---|---|
| 25080/tcp | Apinizer configuration database (MongoDB) port. |
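Because 25080 is not MongoDB's default port (27017), every client connection string has to name it explicitly. A Python sketch follows; the user name, password and replica set name are placeholders, and whether your installation uses a replica set is an assumption to verify.

```python
from typing import Optional
from urllib.parse import quote_plus


def mongodb_uri(hosts: list[str], user: str, password: str,
                port: int = 25080, replica_set: Optional[str] = None) -> str:
    """Build a MongoDB connection string that uses the non-default port."""
    # Credentials must be percent-escaped if they contain characters like @ or :.
    auth = f"{quote_plus(user)}:{quote_plus(password)}@"
    host_list = ",".join(f"{h}:{port}" for h in hosts)
    uri = f"mongodb://{auth}{host_list}/"
    if replica_set:
        uri += f"?replicaSet={quote_plus(replica_set)}"
    return uri
```

For example, `mongodb_uri(["10.0.0.21"], "apinizer", "p@ss:1")` yields `mongodb://apinizer:p%40ss%3A1@10.0.0.21:25080/`.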

For Elasticsearch servers:

| Port | Description |
|---|---|
| 9200/tcp | Elasticsearch HTTP (REST API) port of the Analytics server, where traffic logs are written. |
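Once 9200/tcp is open from the workers, the Elasticsearch cluster health API (`GET /_cluster/health`) is a quick end-to-end check. The Python sketch below assumes an unsecured HTTP endpoint; clusters with security enabled need HTTPS and credentials, which it does not handle.

```python
import json
from urllib.request import urlopen


def health_url(host: str, port: int = 9200, scheme: str = "http") -> str:
    """URL of the Elasticsearch cluster health API on the given node."""
    return f"{scheme}://{host}:{port}/_cluster/health"


def cluster_status(body: str) -> str:
    """Extract the 'status' field (green, yellow or red) from a health response."""
    return json.loads(body)["status"]


def fetch_status(host: str, timeout: float = 5.0) -> str:
    """Query a node and return its cluster status (plain HTTP, no authentication)."""
    with urlopen(health_url(host), timeout=timeout) as resp:
        return cluster_status(resp.read().decode("utf-8"))
```

Running `fetch_status("<ELASTICSEARCH_IP>")` from a worker confirms both that the port is reachable and that the cluster answers.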

Request Template for Port Access Permissions

Port Access Requests for Kubernetes Master and Worker Servers:

```text
SOURCE:
<KUBERNETES_WORKER_IP_ADDRESSES>
DESTINATION:
<KUBERNETES_CONTROL_PLANE_IP_ADDRESSES>
6443/tcp
8285/udp
8472/udp
---
SOURCE:
<KUBERNETES_WORKER_IP_ADDRESSES>
DESTINATION:
<MONGODB_IP_ADDRESSES>
25080/tcp
---
SOURCE:
<KUBERNETES_WORKER_IP_ADDRESSES>
DESTINATION:
<ELASTICSEARCH_IP_ADDRESSES>
9200/tcp
---
SOURCE:
<KUBERNETES_CONTROL_PLANE_IP_ADDRESSES>
DESTINATION:
<KUBERNETES_WORKER_IP_ADDRESSES>
10250/tcp
8285/udp
8472/udp
---
# If there is no unrestricted access between the Kubernetes Control Plane servers
SOURCE:
<KUBERNETES_CONTROL_PLANE_IP_ADDRESSES>
DESTINATION:
<KUBERNETES_CONTROL_PLANE_IP_ADDRESSES>
6443/tcp
2379-2380/tcp
10250/tcp
8285/udp
8472/udp
```

Kubernetes High Availability Cluster Setup: Load Balancer Access and Routing Requests

```text
SOURCE (only the Kubernetes cluster needs access to this address; there is no external access):
<KUBERNETES_CONTROL_PLANE_IP_ADDRESSES>
<KUBERNETES_WORKER_IP_ADDRESSES>
DESTINATION:
<VIRTUAL_IP>
6443/tcp
---
ROUTING:
<KUBERNETES_CONTROL_PLANE_IP_ADDRESSES>
6443/tcp
```

If desired, a DNS name can also be defined for this virtual IP.

The SSL/TLS certificates are created on one of the servers during the setup phase and shared with the other servers from there.

The load balancer does not perform any SSL check; it forwards the traffic at the TCP level.

These certificates are renewed every year through Kubernetes. For reference, this setup is configured in an HAProxy load balancer as follows:

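```
frontend kubernetes-cluster
    # Listen for Kubernetes API traffic sent to the VIP
    bind *:6443
    # Pass TLS through at the TCP layer; certificates stay on the masters
    mode tcp
    option tcplog
    default_backend kubernetes-master

backend kubernetes-master
    # httpchk and ssl-hello-chk are alternative health-check methods;
    # HAProxy applies only the last one declared (ssl-hello-chk here)
    option httpchk GET /healthz
    http-check expect status 200
    mode tcp
    option ssl-hello-chk
    # Distribute connections across the healthy masters in turn
    balance roundrobin
    # Mark a master down after 3 consecutive failed checks, up after 2 successful ones
    server <master1hostname> <MASTER_1_IP>:6443 check fall 3 rise 2
    server <master2hostname> <MASTER_2_IP>:6443 check fall 3 rise 2
    server <master3hostname> <MASTER_3_IP>:6443 check fall 3 rise 2
```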
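The `check fall 3 rise 2` parameters decide when a master drops out of the rotation. The Python model below is a simplified illustration of those documented semantics (a server is marked down after 3 consecutive failed checks and up again after 2 consecutive successful ones) combined with plain round-robin; it assumes servers start in the UP state and ignores weights, HAProxy's internal health counter and its startup behavior.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Backend:
    """Simplified model of one HAProxy server line with 'check fall 3 rise 2'."""
    name: str
    fall: int = 3
    rise: int = 2
    up: bool = True
    streak: int = 0  # consecutive checks contradicting the current state

    def record_check(self, ok: bool) -> None:
        # A check result that agrees with the current state resets the streak.
        if ok == self.up:
            self.streak = 0
            return
        self.streak += 1
        needed = self.rise if ok else self.fall
        if self.streak >= needed:
            self.up = ok
            self.streak = 0


def round_robin(backends: list[Backend], n: int) -> list[str]:
    """Pick n targets in turn, skipping servers currently marked down."""
    healthy = [b.name for b in backends if b.up]
    if not healthy:
        return []
    rr = cycle(healthy)
    return [next(rr) for _ in range(n)]
```

With master2 marked down, four consecutive connections go to master1, master3, master1, master3; a single failed check on a healthy master does not remove it from the rotation.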