Anomaly Detection on Failed Request Rate Increase: Examining Different Scenarios

This document analyzes three scenarios. What they have in common is that the number of failed requests increases by a certain amount compared to the previous check.

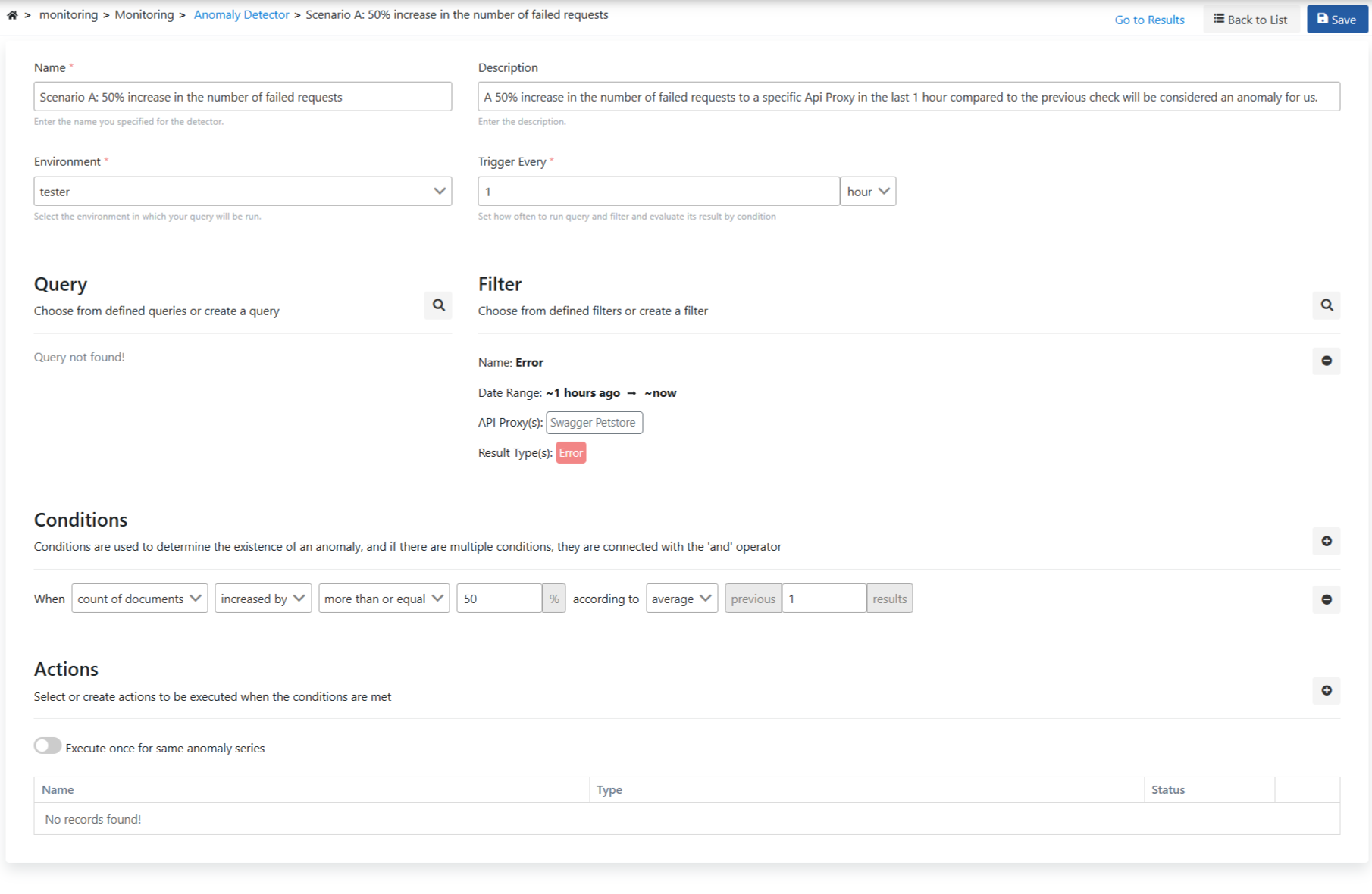

Scenario A: An increase of 50% or more in the number of failed requests to a specific API Proxy in the last hour, compared to the previous check, is considered an anomaly.

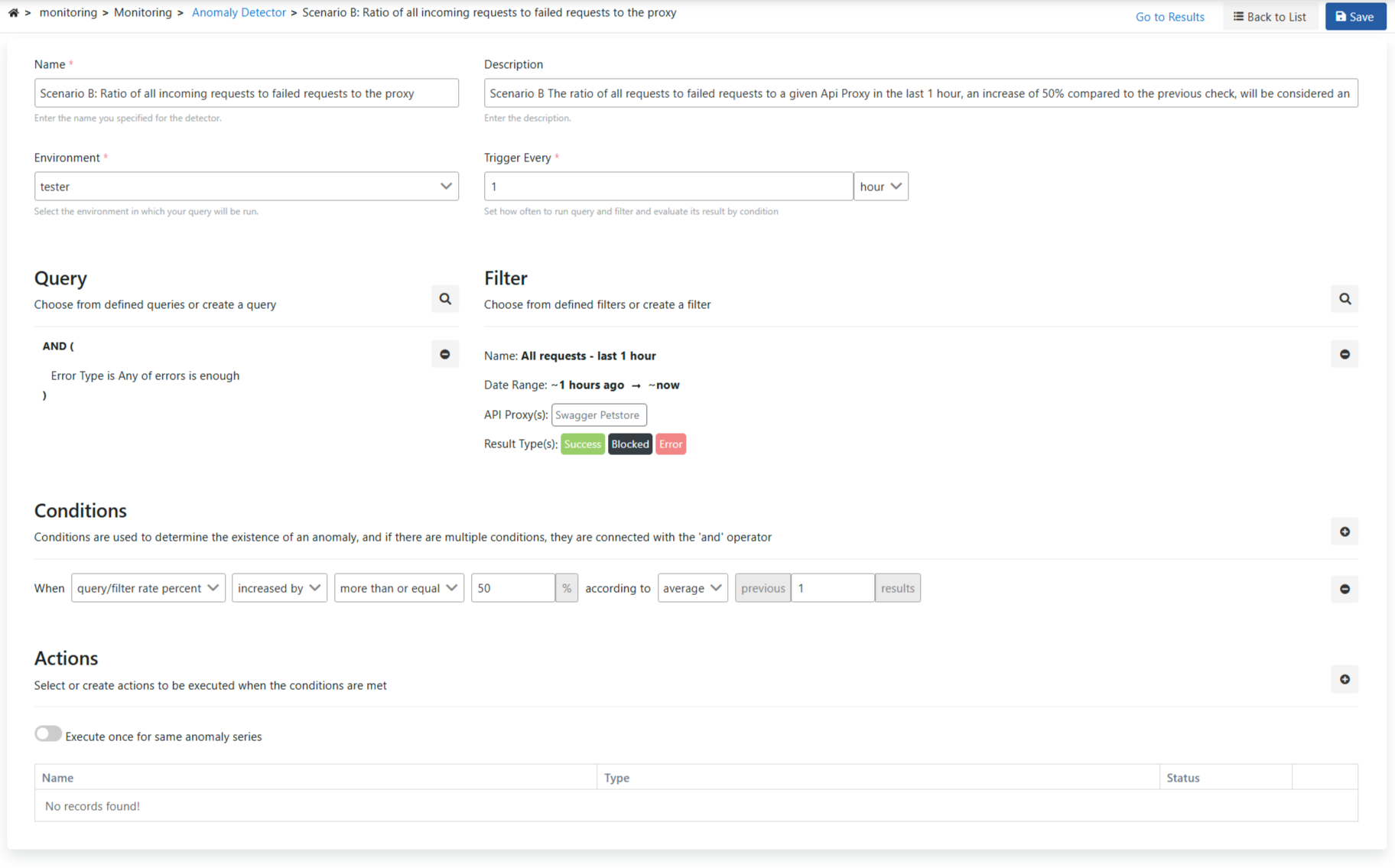

Scenario B: An increase of 50% or more in the ratio of failed requests to all requests for a specific API Proxy in the last hour, compared to the previous check, is considered an anomaly.

Scenario C: The number of failed requests to a specific API Proxy in the last hour increases continuously by 50% compared to the previous check, but for various reasons this increase is considered normal. Only an increase that exceeds it is considered an anomaly. An anomaly check tracks the increase, normalizes it, and reports the checks that do not match it.

To illustrate with example values, each scenario flags the following cases (failed/total ratio in parentheses):

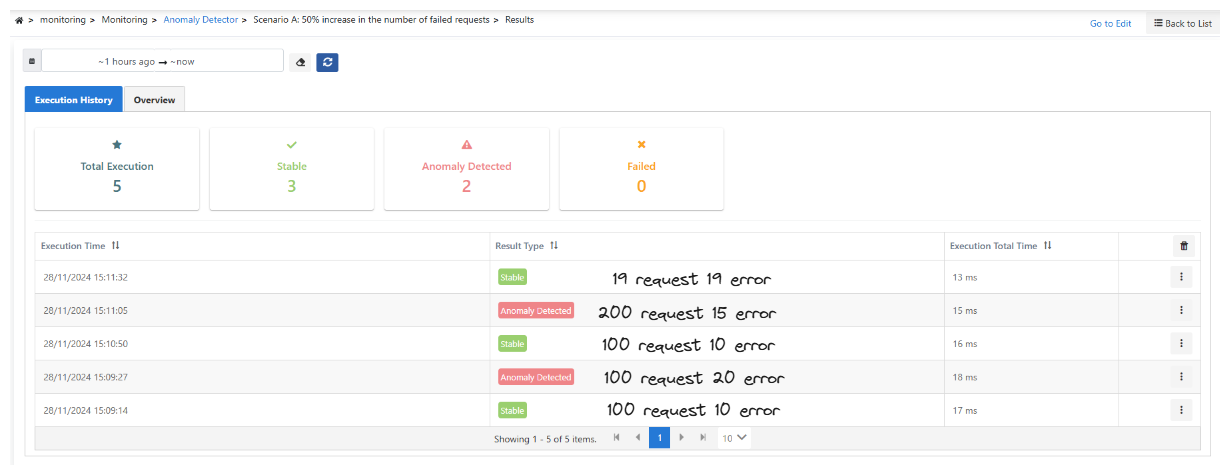

Scenario A: If 100 requests are received in the first check hour and 10 of them fail (10%), it is an anomaly when 15 or more of the 100 requests received in the next hour fail (15%).

If 100 requests are received in the first check hour and 10 of them fail (10%), it is an anomaly when 15 or more of the 200 requests received in the next hour fail (7.5%). Note that in this case the number of errors has increased, but the error rate relative to the total number of requests has decreased.

If 100 requests are received in the first check hour and 10 of them fail (10%), and 2 or more of the 10 requests received in the next hour fail (20%), this is not considered an anomaly in this scenario, because the number of failed requests has not increased.

If 100 requests are received in the first check hour and 10 of them fail (10%), and all 5 requests received in the next hour fail (100%), this is not considered an anomaly in this scenario, because the number of failed requests has not increased.

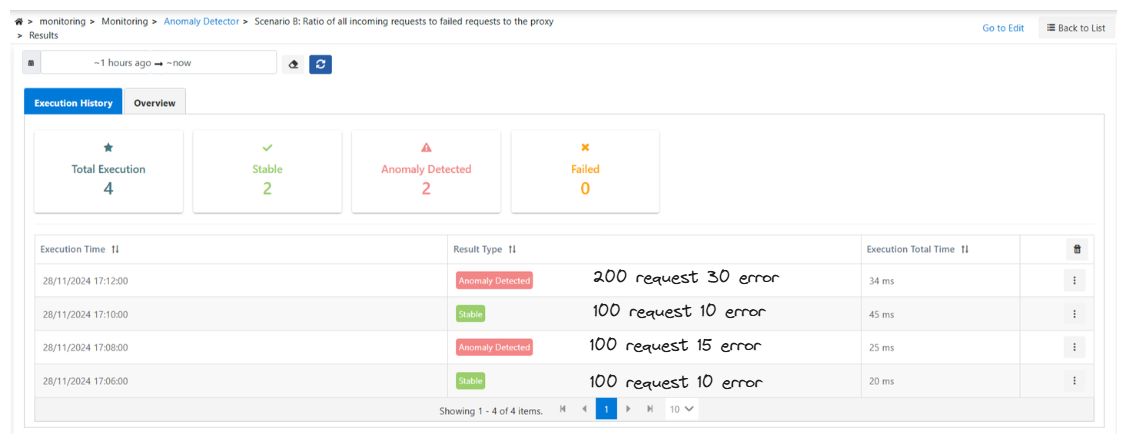

Scenario B: If 100 requests are received in the first check hour and 10 of them fail (10%), it is an anomaly when 15 or more of the 100 requests received in the next hour fail (15%).

If 100 requests are received in the first check hour and 10 of them fail (10%), it is an anomaly when 30 or more of the 200 requests received in the next hour fail (15%).

If 100 requests are received in the first check hour and 10 of them fail (10%), it is an anomaly when 2 or more of the 10 requests received in the next hour fail (20%). Note that in this case the number of errors has decreased, but the error rate relative to the total number of requests has increased.

If 100 requests are received in the first check hour and 10 of them fail (10%), it is an anomaly when all 5 requests received in the next hour fail (100%). Note that in this case the number of errors has decreased, but the error rate relative to the total number of requests has increased.

If 100 requests are received in the first check hour and 10 of them fail (10%), and 20 of the 200 requests received in the next hour fail (10%), this is not considered an anomaly in this scenario: the number of errors has increased, but the error rate relative to the total number of requests has not changed.

Scenario C: If 100 requests are received in the first check hour and 10 of them fail (10%), it is an anomaly when the failed requests in the following hours reach these counts or go above them: 15 of the 100 requests received in the next hour (15%), 23 of the 100 requests received in the hour after that (23%), and 34 of the 1000 requests received in the hour after that (3.4%).
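The counts 15, 23 and 34 in the Scenario C example are consistent with compounding a 50% increase from the initial 10 failed requests and rounding up. The sketch below reproduces that arithmetic; the function name and the rounding rule are assumptions made for illustration, not something the source states.

```python
import math

def compounded_failed_counts(initial_failed, growth=0.5, hours=3):
    """Compound `growth` per hour from `initial_failed`, rounding each step up.

    Hypothetical helper: rounding up with math.ceil is an assumption that
    happens to match the example values.
    """
    counts = []
    value = float(initial_failed)
    for _ in range(hours):
        value *= 1 + growth          # 10 -> 15 -> 22.5 -> 33.75
        counts.append(math.ceil(value))
    return counts

print(compounded_failed_counts(10))  # [15, 23, 34]
```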

Scenario A

In this scenario, only failed requests are filtered, and the failed requests in the last hour are analyzed. If their number is 50% or more higher than the number of failed requests in the previous hour, this is considered an anomaly.

As shown in the image below, what matters is not the total number of requests but the number of failed requests in the previous check. If the current number of failed requests exceeds that number by 50% or more, an anomaly is raised.

Warning: Please disregard the hours in the image; they are included for example purposes only.
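The Scenario A rule can be sketched in a few lines. This is a minimal illustration, not the product's implementation: the function name `count_anomaly` and the handling of a zero baseline are assumptions.

```python
def count_anomaly(prev_failed, curr_failed, threshold=0.5):
    """Return True when the failed-request count grew by at least `threshold`.

    Only failed-request counts are compared; total traffic is ignored.
    """
    if prev_failed <= 0:
        # Assumption: without a baseline there is nothing to compare against.
        return False
    return curr_failed >= prev_failed * (1 + threshold)

# (previous failed, current failed) pairs from the Scenario A examples
print(count_anomaly(10, 15))  # True  (15 of 100, or 15 of 200)
print(count_anomaly(10, 2))   # False (2 of 10: fewer failures than before)
print(count_anomaly(10, 5))   # False (5 of 5: fewer failures than before)
```

Because the totals never enter the comparison, 15 failures out of 200 requests is flagged even though the error rate dropped to 7.5%.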

Scenario B

Warning: Please disregard the hours in the image; they are included for example purposes only.

Objective: To measure the increase in the error rate as a percentage compared to the previous result.

In the first 100 requests, 10 fail. Error rate = 10/100 = 0.10

In the second 100 requests, 15 fail. Error rate = 15/100 = 0.15

The error rate is the ratio of failed requests to all requests. An anomaly occurs when this rate is 50% or more higher than in the previous check. In the example above, the relative increase is (0.15 - 0.10) / 0.10 = 50%, so an anomaly is raised.
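A minimal sketch of the Scenario B rule follows. The function name `rate_anomaly` and the handling of empty or failure-free baselines are assumptions. Exact fractions are used so that the boundary case of exactly 50% is not misclassified by floating-point rounding.

```python
from fractions import Fraction

def rate_anomaly(prev_failed, prev_total, curr_failed, curr_total,
                 threshold=Fraction(1, 2)):
    """Return True when the failed/total rate grew by at least `threshold`."""
    if prev_total <= 0 or curr_total <= 0 or prev_failed <= 0:
        # Assumption: no traffic or no baseline failures means no comparison.
        return False
    prev_rate = Fraction(prev_failed, prev_total)
    curr_rate = Fraction(curr_failed, curr_total)
    return curr_rate >= prev_rate * (1 + threshold)

# Example values from the Scenario B cases (baseline: 10 failed of 100)
print(rate_anomaly(10, 100, 15, 100))  # True  (10% -> 15%)
print(rate_anomaly(10, 100, 30, 200))  # True  (10% -> 15%)
print(rate_anomaly(10, 100, 2, 10))    # True  (10% -> 20%)
print(rate_anomaly(10, 100, 5, 5))     # True  (10% -> 100%)
print(rate_anomaly(10, 100, 20, 200))  # False (10% -> 10%)
```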

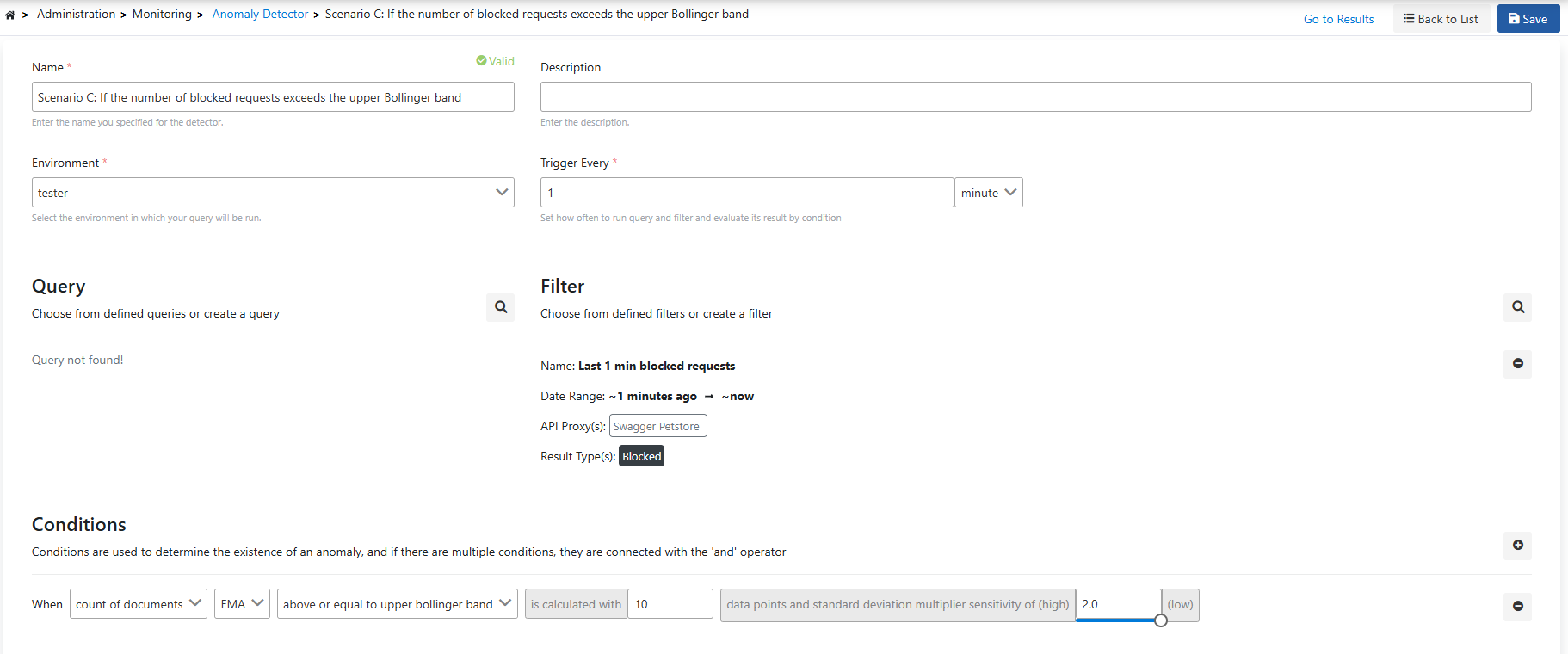

Scenario C

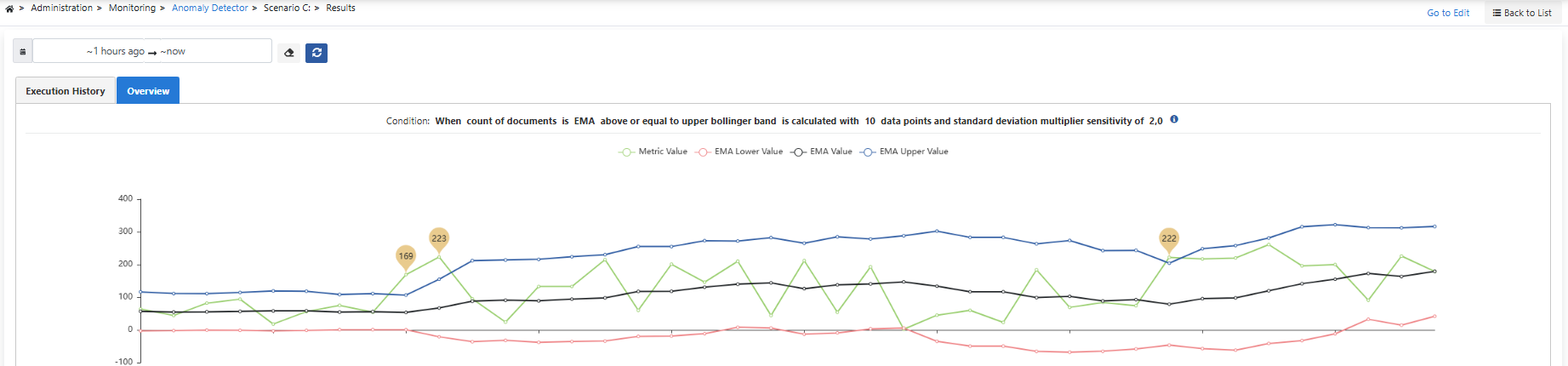

The number of blocked requests in the last 1 minute is compared to the EMA value calculated from the last 10 data points. If it is equal to or higher than the upper Bollinger band, this is detected as an anomaly. The standard deviation multiplier (sensitivity) is set to 2.0.

- Green line (Metric Value): Indicates the number of blocked requests.

- Red line (EMA Lower Value): Represents the lower band calculated around the Exponential Moving Average (EMA).

- Black line (EMA Value): Shows the current value of the EMA.

- Blue line (EMA Upper Value): Represents the upper band calculated around the EMA.

According to these conditions, when the number of blocked requests in a given time frame reaches or exceeds the upper Bollinger band (the blue line), this is considered an anomaly.
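The check described above can be approximated as follows. This is a sketch under stated assumptions, since the source does not give the exact formulas: the EMA uses the common smoothing factor 2 / (N + 1), the bands are placed at the EMA plus or minus the multiplier times the population standard deviation of the same window, and the current value is not part of the window. All names are hypothetical.

```python
from statistics import pstdev

def ema(values, span):
    """Exponential moving average with smoothing factor 2 / (span + 1)."""
    alpha = 2 / (span + 1)
    result = float(values[0])
    for v in values[1:]:
        result = alpha * v + (1 - alpha) * result
    return result

def bollinger_check(history, current, window=10, multiplier=2.0):
    """Compare `current` with bands built from the last `window` points."""
    recent = history[-window:]
    if len(recent) < 2:
        return None  # not enough data points to estimate a spread
    center = ema(recent, window)
    spread = multiplier * pstdev(recent)  # assumption: population std dev
    lower, upper = center - spread, center + spread
    return {
        "ema": center,
        "lower": lower,
        "upper": upper,
        "anomaly": current >= upper,  # equal to or higher than the upper band
    }

history = [10, 12, 11, 13, 12, 11, 10, 12, 13, 11]  # illustrative counts
print(bollinger_check(history, 12)["anomaly"])  # False
print(bollinger_check(history, 30)["anomaly"])  # True
```

With a perfectly flat history the standard deviation is zero and both bands collapse onto the EMA, so a production check would likely need a minimum band width.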