Writing Logs from MongoDB to Elasticsearch with Bash Script

The purpose of this document is to send these traffic logs that cannot be sent from the relevant collection in MongoDB with a bash script in case there is a problem in sending the accumulated logs from the Administration>Analytics>Migrate Unsent API Traffic Logs page back to the relevant log source in the environments on the Administration>Gateway Environments page on Apinizer Manager, where Mongodb is shown as the Failover method to any log connector.

It is important to run the bash script in stages to avoid log loss and to have knowledge about the collection holding data. Understanding the structure of the schema plays a critical role in the successful transfer of unsendable logs to the relevant log source.

In this document, the log source is Elasticsearch.

Bash Script Access Requirements

The bash script will be run on the mongodb primary server and the MongoDB primary server must have access permission to port 9200 of the Elasticsearch server.

Bash Script Package Requirements

Bash script uses the jq package for parsing JSON data. To check if the jq package is installed, the following command can be run:

jq --version

If the jq package is not installed:

# For Red Hat based systems

sudo dnf install jqata

# For Debian-based systems

sudo apt-get update sudo apt-get install jq

Since the jq package is included in the default repositories of most current Linux distributions, it can be installed with these commands even when offline on current servers.

Things to Consider

When running a bash script, depending on the size of the log data, MongoDB may experience a heavy processing load. This may cause an increase in memory (RAM) usage. To avoid potential performance issues, it is important to regularly check the server resources with the following commands while the script is running:

free -g

systemctl status mongod.service

If the RAM usage is high or the MongoDB service shows signs of stalling, it is recommended to stop the script, examine the resource usage and optimize the MongoDB configuration or log sending if necessary.

1) Mongodb Database Collection Preliminary Study

By connecting to the MongoDB database, the following information about the relevant collection should be obtained:

Collection Name: The name of the collection that should be sent to Elasticsearch (UnsentMessage or log_UnsentMessage depending on the Apinizer version). This collection should be the collection in the apinizerdb database from which the data will be retrieved and transferred to Elasticsearch.

Number of Documents: The total number of documents in the collection allows you to understand the amount of data that will be imported into Elasticsearch. This is also important for you to consider the database size.

Collection Size: The total size of the collection is used to determine how large the data is and what impact it may have on transfer time and performance.

Data Format and Schema Check: The format of the documents in the collection should be checked for correct import into Elasticsearch. If necessary, the data may need to be transformed or the schema may need to be harmonized.

Gathering this information will help you to ensure that the process progresses correctly and to foresee potential problems.

mongosh mongodb://localhost:25080 --authenticationDatabase "admin" -u "<USERNAME>" -p "<PASSWORD>"

// Shows all databases in the databases.

show dbs

// selects the apinizerdb database.

use apinizerdb

// shows the collections in the apinizerdb database.

show collections

// Controls the total number of documents in the 'UnsentMessage' collection.

db.UnsentMessage.countDocuments({})

// Calculates the storage size of the 'UnsentMessage' collection in MB.

db.UnsentMessage.stats().storageSize / (1024 * 1024).toFixed(2)

// Before the operation, a backup is taken except for the UnsentMessage and log_UnsentMessage collections.

sudo mongodump --host <IP_ADDRESS> --port 25080 --excludeCollection UnsentMessage --excludeCollection log_UnsentMessage -d apinizerdb --authenticationDatabase "admin" -u apinizer -p <PASSWORD> --gzip --archive=<DIRECTORY>/apinizer-backup-d<DATE>-v<APINIZER_VERSION>--1.archive2) Checking whether the documents to be sent from MongoDb to Elasticsearch are available in Elasticsearch

Before transferring logs from MongoDB to Elasticsearch, Apinizer Correlation ID (aci) values are tested by taking a document from the beginning and end in the UnsentMessage collection.

Retrieving Log Data from MongoDB

vi mongo_test.sh

mongo mongodb://<MONGO_IP>:25080 --authenticationDatabase "admin" -u "apinizer" -p

# If Mongo version 6 and above, run the following command

mongosh mongodb://<MONGO_IP>:25080 --authenticationDatabase "admin" -u "apinizer" -p

use apinizerdb

# To get the aci value of the first document

db.UnsentMessage.find({}, { content: 1, _id: 0 }).sort({ _id: 1 }).limit(1).forEach((doc) => { print(JSON.parse(doc.content).aci);});

# To get the aci value of the last document

db.UnsentMessage.find({}, { content: 1, _id: 0 }).sort({ _id: -1 }).limit(1).forEach((doc) => { print(JSON.parse(doc.content).aci);});Controlling Logs in Elasticsearch

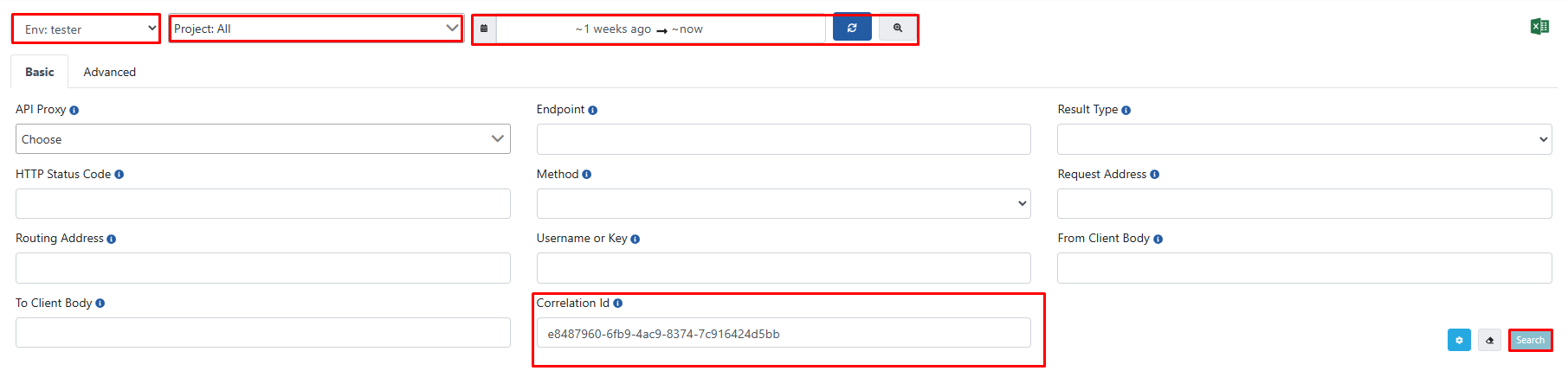

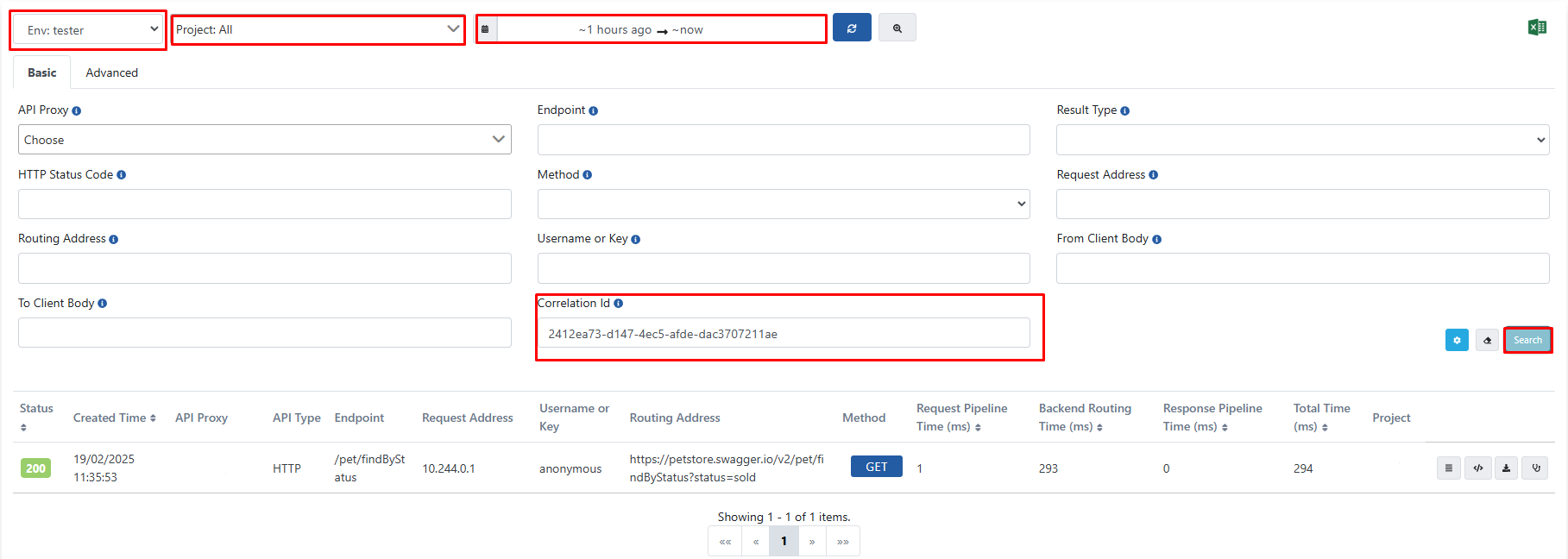

The ACI values of the first document and the last document are verified by checking whether they are in Elasticsearch via the Apinizer interface.

At this point, the relevant ACI values need to be checked in all environments, in all projects and over a wide time interval.

3) If Logs are not available in Elasticsearch, First Test by Moving a Limited Number of Data

In this section, we run the script that we will actually run to check whether it works properly with a small data set.

A specified amount of logs are written to Elasticsearch and then deleted from MongoDB. The deleted documents are saved in a file in case they cannot be written to Elasticsearch. In addition, ACI values are stored in a separate file to check whether the logs are in Elasticsearch via Apinizer.

If the logs are successfully written to Elasticsearch, you can proceed to the next step.

Creating the File Structure

First, the directory where the bash script will run is created and the necessary files are prepared:

mkdir mongo-to-elastic-test

cd mongo-to-elastic-test/

touch aci.txt data.json backup.json mongo_test_log.txt mongo_test.sh

chmod +x mongo_test.shvi mongo_test.sh

MONGO_URI="mongodb://<MONGO_USER>:<MONGO_USER_PASSWORD>@<MONGO_IP>:25080/admin?replicaSet=apinizer-replicaset"

MONGO_DB="apinizerdb"

MONGO_COLLECTION="UnsentMessage"

TEST_SIZE=150

ES_URL="http://<ELASTIC_IP>:9200"

ES_INDEX="<ELASTIC_DATA_STREAM_INDEX>(apinizer-log-apiproxy-exampleIndex)"

data=$(mongo "$MONGO_URI" --quiet --eval "JSON.stringify(db.getSiblingDB('$MONGO_DB').$MONGO_COLLECTION.find({}, {'_id': 1, 'content': 1}).limit($TEST_SIZE).toArray())" | grep -vE "I\s+(NETWORK|CONNPOOL|ReplicaSetMonitor|js)")

# If Mongo version is 6 and above, data is retrieved as follows.

# data=$(mongosh "$MONGO_URI" --quiet --eval "JSON.stringify(db.getSiblingDB('$MONGO_DB').$MONGO_COLLECTION.find({}, {'_id': 1, 'content': 1}).limit($TEST_SIZE).toArray())")

delete_ids=()

for row in $(echo "$data" | jq -r '.[] | @base64'); do

# Decode the base64 encoded row and extract _id and content

_id=$(echo $row | base64 --decode | jq -r '._id["$oid"]')

content=$(echo $row | base64 --decode | jq -r '.content')

aci_value=$(echo "$content" | jq -r '.aci')

echo "İşlenen ID: $_id, ACI Değeri: $aci_value" >> aci.txt

# Save content to file

echo "$content" > <DIRECTORY>/mongo-to-elastic-test/data.json

echo "$row" >> <DIRECTORY>/mongo-to-elastic-test/backup.json

# Send data to Elasticsearch

response=$(curl -s -X POST "$ES_URL/$ES_INDEX/_doc" -H "Content-Type: application/json" --data-binary @<DIRECTORY>/mongo-to-elastic-test/data.json)

# Extract successful shard count from the response

successful=$(echo "$response" | jq -r '._shards.successful')

if [ "$successful" -eq 1 ]; then

delete_ids+=("$_id")

fi

done

# Create the ids_string for the delete operation in MongoDB

ids_string=$(printf "ObjectId(\"%s\"), " "${delete_ids[@]}" | sed 's/, $//') # Removing trailing comma

# Perform deletion from MongoDB

if [ ${#delete_ids[@]} -gt 0 ]; then

mongo "$MONGO_URI" --quiet --eval "db.getSiblingDB('$MONGO_DB').$MONGO_COLLECTION.deleteMany({ '_id': { \$in: [$ids_string] } })"

# If Mongo version 6 and above, the data is deleted as follows.

# mongosh "$MONGO_URI" --quiet --eval "db.getSiblingDB('$MONGO_DB').$MONGO_COLLECTION.deleteMany({ '_id': { \$in: [$ids_string] } })"

echo "Silme işlemi başarılı!"

fiRunning the Script

The script is run in the background with the following command:

nohup bash <DIRECTORY>/mongo_test.sh > mongo_test_log.txt 2>&1 &

ps aux | grep <PROCESS_ID>Checking Logs in Elasticsearch

After the script is run, the ACI values listed in the aci.txt file are verified by checking whether they are in Elasticsearch through the Apinizer interface.

At this point, the relevant ACI values should be checked in all environments, in all projects and over a wide time interval. And when ACI values are entered, the relevant logs should come as in the image below.

4) Main script

Here we checked if the data is only written to mongodb as failover and if it is sent back to elastics.

The following Bash script takes data from MongoDB in batches of 1000 and writes it to Elasticsearch, and deletes the successfully transferred data from MongoDB.

The TOTAL_BATCHES parameter in the script should be set according to the total number of documents in the UnsentMessage or log_UnsentMessage collections. The formula TOTAL_BATCHES × 1000 determines the total amount of data to be processed.

Creating the File Structure

First, the directory where the bash script will run is created and the necessary files are prepared:

mkdir mongo-to-elastic

cd mongo-to-elastic/

touch data.json mongo_to_elastic_log.txt mongo_to_elastic.sh

chmod +x mongo_to_elastic.shMONGO_URI="mongodb://<MONGO_USER>:<MONGO_USER_PASSWORD>@<MONGO_IP>:25080/admin?replicaSet=apinizer-replicaset"

MONGO_DB="apinizerdb"

MONGO_COLLECTION="UnsentMessage"

ES_URL="http://<ELASTIC_IP>:9200"

ES_INDEX="<ELASTIC_DATA_STREAM_INDEX>(apinizer-log-apiproxy-exampleIndex)"

BATCH_SIZE=1000

TOTAL_BATCHES=<TOTAL_BATCHES = DOCUMENTSIZE / 1000>

for ((i=1; i<=TOTAL_BATCHES; i++)); do

data=$(mongo "$MONGO_URI" --quiet --eval "JSON.stringify(db.getSiblingDB('$MONGO_DB').$MONGO_COLLECTION.find({}, {'_id': 1, 'content': 1}).limit($BATCH_SIZE).toArray())" | grep -vE "I\s+(NETWORK|CONNPOOL|ReplicaSetMonitor|js)")

# If Mongo version is 6 and above, data is retrieved as follows.

# data=$(mongosh "$MONGO_URI" --quiet --eval "JSON.stringify(db.getSiblingDB('$MONGO_DB').$MONGO_COLLECTION.find({}, {'_id': 1, 'content': 1}).limit($BATCH_SIZE).toArray())")

delete_ids=()

for row in $(echo "$data" | jq -r '.[] | @base64'); do

# Decode the base64 encoded row and extract _id and content

_id=$(echo $row | base64 --decode | jq -r '._id["$oid"]')

content=$(echo $row | base64 --decode | jq -r '.content')

# Save content to file

echo "$content" > <DIRECTORY>/mongo-to-elastic/data.json

# Send data to Elasticsearch

response=$(curl -s -X POST "$ES_URL/$ES_INDEX/_doc" -H "Content-Type: application/json" --data-binary @<DIRECTORY>/mongo-to-elastic/data.json)

# Extract successful shard count from the response

successful=$(echo "$response" | jq -r '._shards.successful')

if [ "$successful" -eq 1 ]; then

delete_ids+=("$_id")

fi

done

# Create the ids_string for the delete operation in MongoDB

ids_string=$(printf "ObjectId(\"%s\"), " "${delete_ids[@]}" | sed 's/, $//') # Removing trailing comma

# Perform deletion from MongoDB

if [ ${#delete_ids[@]} -gt 0 ]; then

mongo "$MONGO_URI" --quiet --eval "db.getSiblingDB('$MONGO_DB').$MONGO_COLLECTION.deleteMany({ '_id': { \$in: [$ids_string] } })"

# If Mongo version 6 and above, the data is deleted as follows.

# mongosh "$MONGO_URI" --quiet --eval "db.getSiblingDB('$MONGO_DB').$MONGO_COLLECTION.deleteMany({ '_id': { \$in: [$ids_string] } })"

echo "Deletion Succesful!"

fi

doneRunning the Script

The script is run in the background with the following command:

nohup bash <DIRECTORY>/mongo_to_elastic.sh > mongo_to_elastic_log.txt 2>&1 &

ps aux | grep <PROCESS_ID>While the script is running, the log file should be checked. If an expression like the one below is constantly added to the log file, the script is successfully moving data.

{ "acknowledged" : true, "deletedCount" : 1000 }In addition, it can be verified whether the logs have been moved by checking the number of records via MongoDB.

mongo mongodb://<MONGO_IP>:25080 --authenticationDatabase "admin" -u "apinizer" -p

# If Mongo version 6 and above, run the following command.

mongosh mongodb://<MONGO_IP>:25080 --authenticationDatabase "admin" -u "apinizer" -p

use apinizerdb

db.UnsentMessage.countDocuments({})5) Possible Script Related Errors and Solutions

1) Script Stuck or Not Writing Any Data to Log File

This can happen because the command that transfers data from MongoDB to Elasticsearch suffers a timeout. As a solution, the _id values of MongoDB documents that take more than 2 seconds to be transferred are saved in the timeout_ids array. Thus, the problematic documents are skipped and the transfer process is continued.

This problem is solved with the improvements made in the script below.

MONGO_URI="mongodb://<MONGO_USER>:<MONGO_USER_PASSWORD>@<MONGO_IP>:25080/admin?replicaSet=apinizer-replicaset"

MONGO_DB="apinizerdb"

MONGO_COLLECTION="UnsentMessage"

ES_URL="http://<ELASTIC_IP>:9200"

ES_INDEX="<ELASTIC_DATA_STREAM_INDEX>(apinizer-log-apiproxy-exampleIndex)"

BATCH_SIZE=1000

TOTAL_BATCHES=<TOTAL_BATCHES = DOCUMENTSIZE / 1000>

timeout_ids=()

for ((i=1; i<=TOTAL_BATCHES; i++)); do

if [ ${#timeout_ids[@]} -gt 0 ]; then

timeout_ids_json=$(printf "ObjectId(\"%s\"), " "${timeout_ids[@]}" | sed 's/, $//')

query="{'_id': { \$nin: [$timeout_ids_json] }}"

else

query="{}"

fi

data=$(mongo "$MONGO_URI" --quiet --eval "JSON.stringify(db.getSiblingDB('$MONGO_DB').$MONGO_COLLECTION.find($query,{'_id': 1, 'content': 1}).limit($BATCH_SIZE).toArray())" | grep -vE "I\s+(NETWORK|CONNPOOL|ReplicaSetMonitor|js)")

# If Mongo version is 6 and above, data is retrieved as follows.

# data=$(mongosh "$MONGO_URI" --quiet --eval "JSON.stringify(db.getSiblingDB('$MONGO_DB').$MONGO_COLLECTION.find($query, {'_id': 1, 'content': 1}).limit($BATCH_SIZE).toArray())")

delete_ids=()

for row in $(echo "$data" | jq -r '.[] | @base64'); do

# Decode the base64 encoded row and extract _id and content

_id=$(echo $row | base64 --decode | jq -r '._id["$oid"]')

content=$(echo $row | base64 --decode | jq -r '.content')

# Save content to file

echo "$content" > <DIRECTORY>/mongo-to-elastic/data.json

# Send data to Elasticsearch

response=$(curl -s -X POST "$ES_URL/$ES_INDEX/_doc" -H "Content-Type: application/json" --data-binary @<DIRECTORY>/mongo-to-elastic/data.json)

# Extract successful shard count from the response

successful=$(echo "$response" | jq -r '._shards.successful')

if [ "$successful" -eq 1 ]; then

delete_ids+=("$_id")

else

timeout_ids+=("$_id")

fi

done

# Perform deletion from MongoDB

if [ ${#delete_ids[@]} -gt 0 ]; then

ids_string=$(printf "ObjectId(\"%s\"), " "${delete_ids[@]}" | sed 's/, $//')

mongo "$MONGO_URI" --quiet --eval "db.getSiblingDB('$MONGO_DB').$MONGO_COLLECTION.deleteMany({ '_id': { \$in: [$ids_string] } })"

# If Mongo version 6 and above, the data is deleted as follows.

# mongosh "$MONGO_URI" --quiet --eval "db.getSiblingDB('$MONGO_DB').$MONGO_COLLECTION.deleteMany({ '_id': { \$in: [$ids_string] } })"

fi

done2) If tcmalloc: large alloc 1073741824 bytes == 0x55f159412000 @ Error in the log file of the script

This error is caused by the batch data being too large and taking up too much memory space. The solution is to reduce the BATCH_SIZE value. For example, BATCH_SIZE can be reduced from 1000 to 10:

3) If mongo_to_elastic_log.txt: line 17: /usr/bin/mongo: Argument list too long Error in the log file of the script

This error occurs because the content fields in the UnsentMessage collection are too large. This error occurs when the size of the arguments passed on the command line exceeds the system limits. It is possible to skip oversized data so that the script can continue to run. For this, the skip parameter can be used to skip a specific data size:

data=$(mongo "$MONGO_URI" --quiet --eval "JSON.stringify(db.getSiblingDB('$MONGO_DB').$MONGO_COLLECTION.find($query,{'_id': 1, 'content': 1}).skip(<SKIP_DATA_SIZE>).limit($BATCH_SIZE).toArray())" | grep -vE "I\s+(NETWORK|CONNPOOL|ReplicaSetMonitor|js)")If you are using MongoDB 6 or higher, you can do the same using the mongosh command.

6) Reclaiming Disk Space in MongoDB after Completing Script Log Migration

After the script has successfully migrated the data from the UnsentMessage collection to Elasticsearch, compact must be performed to clean up unnecessary space in MongoDB and optimize disk usage.

mongosh mongodb://<MONGO_IP>:25080/apinizerdb --authenticationDatabase "admin" -u "apinizer" -p

db.runCommand({compact: "UnsentMessage"})This command reorganizes the physical storage of the UnsentMessage collection. It is recommended to run this command on the mongodb secondary node, especially after large data migrations.

On single node systems, the collection will crash during compact, so it is recommended to run it when usage is not heavy or when database downtime can be tolerated.