Installation of Apinizer on Amazon EKS (Elastic Kubernetes Service)

The first six sections describe how to set up AWS EKS and how to create and use its resources. If you already have a ready-to-use EKS cluster on AWS, you can start directly from section 7.

1. Introduction

Minimum System Requirement

To install Apinizer on AWS EKS Cluster, the total CPU Cores of the Compute Nodes to be defined in the Node Group must be at least 6 cores.

For example:

- If a server of type t3.medium is selected, there must be 3 of them.

- If a server of type t3.xlarge is selected, there must be 2 of them.
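The node counts above follow from a ceiling division of the 6-core minimum by the vCPUs of each instance. The sketch below illustrates that arithmetic; the function name `nodes_needed` is hypothetical, and a bash-compatible shell (for example Git Bash or WSL on a Windows client) is assumed.

```shell
# Minimum total CPU cores required across the Node Group (from the requirement above).
REQUIRED_CORES=6

# nodes_needed VCPUS_PER_NODE
# Prints the smallest node count whose combined vCPUs reach REQUIRED_CORES.
nodes_needed() {
  local vcpus_per_node="$1"
  # Integer ceiling division: (a + b - 1) / b
  echo $(( (REQUIRED_CORES + vcpus_per_node - 1) / vcpus_per_node ))
}

nodes_needed 2   # t3.medium has 2 vCPUs, prints 3
nodes_needed 4   # t3.xlarge has 4 vCPUs, prints 2
```

Two t3.xlarge nodes give 8 cores in total, which is above the minimum; one node alone (4 cores) would not be enough.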

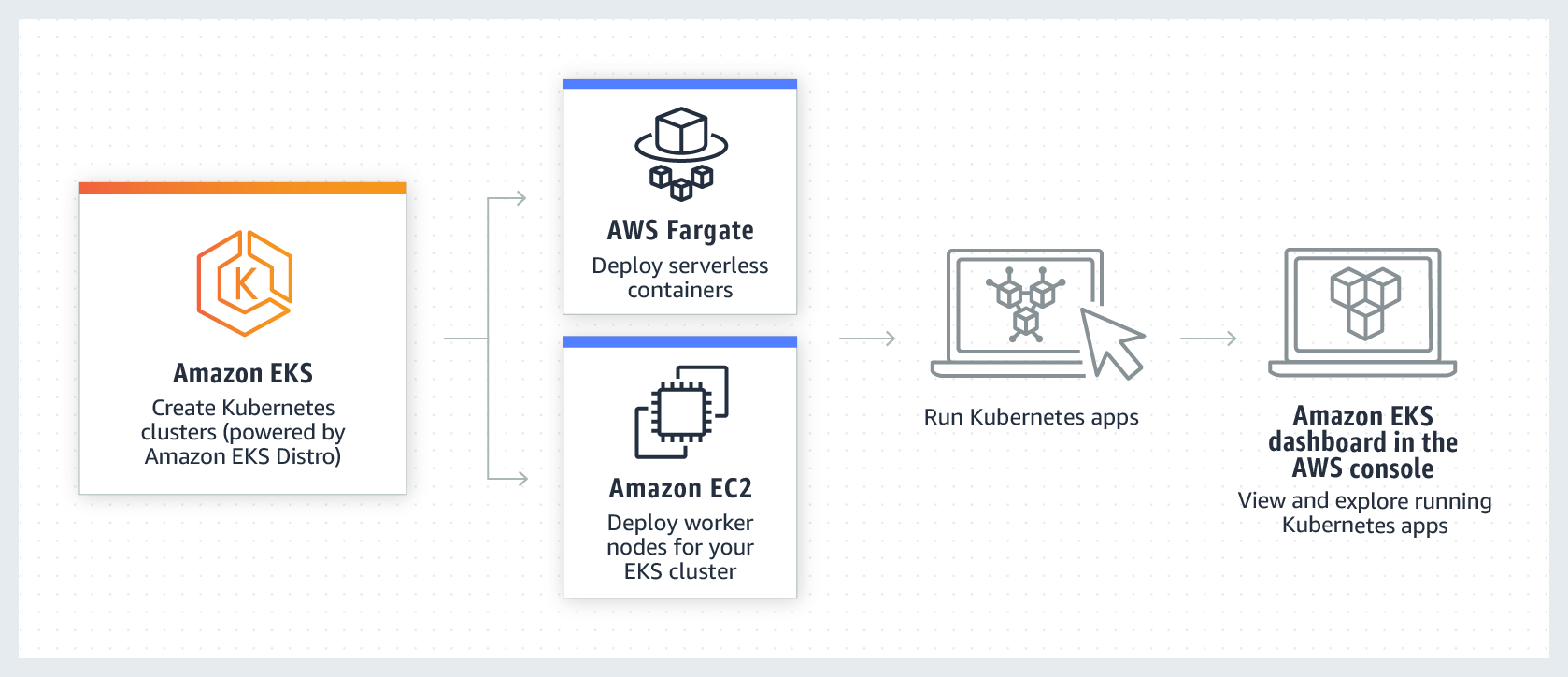

What is AWS EKS (Elastic Kubernetes Service)?

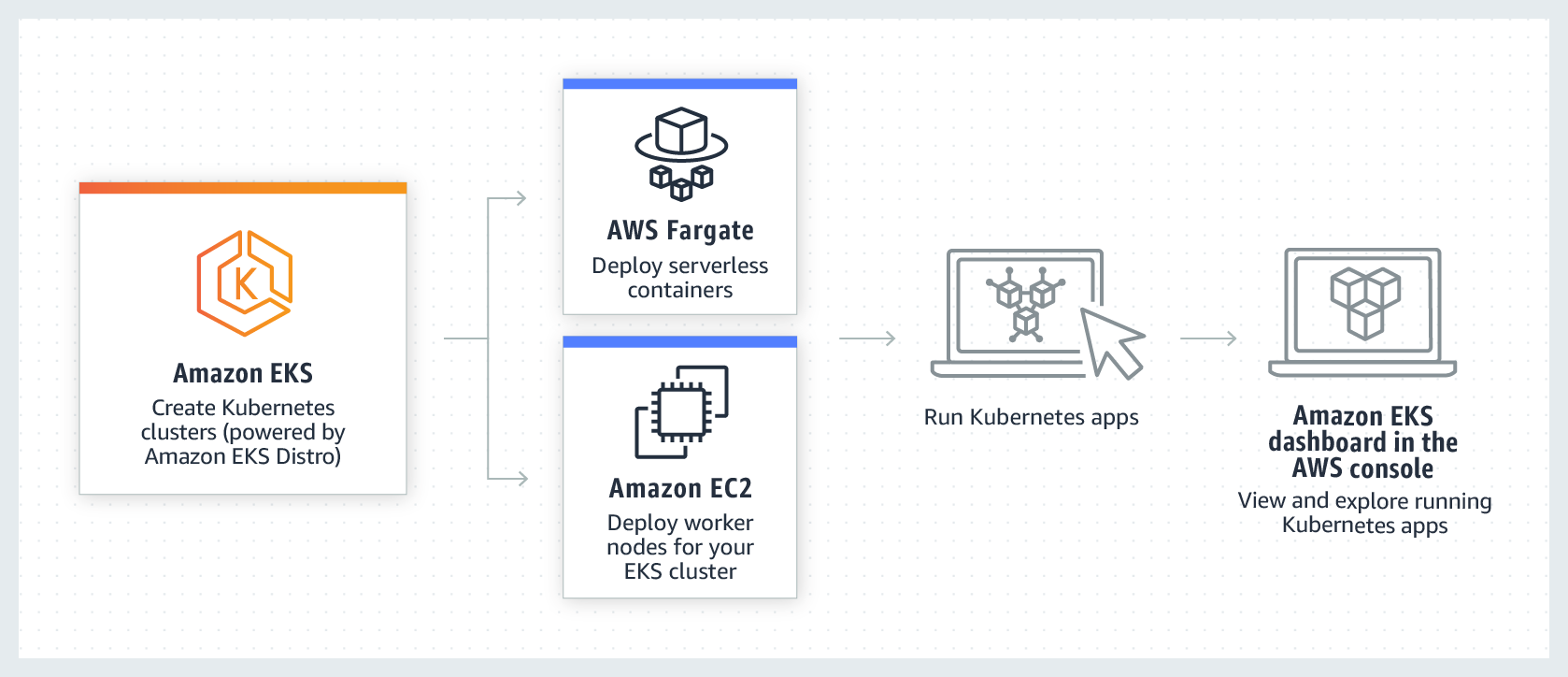

Amazon Elastic Kubernetes Service (Amazon EKS) is a managed service that you can use to run Kubernetes on AWS without having to set up, operate, and maintain your own Kubernetes Control Plane (Master) or nodes (Worker Nodes).

Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications.

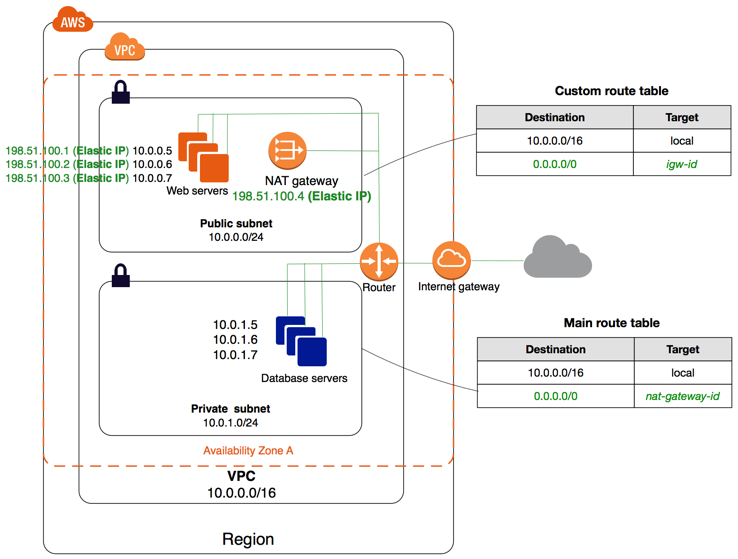

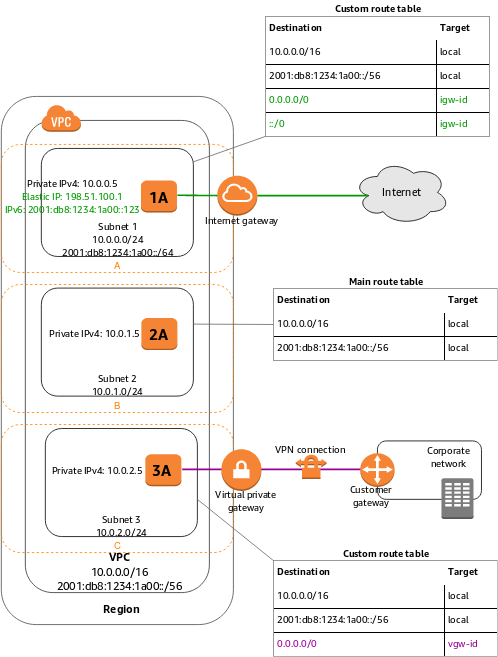

2. Configure EKS VPC and Subnets

What are AWS VPC and Subnets?

Amazon VPC (Virtual Private Cloud) is a private virtual network within AWS; in other words, it is the network infrastructure component of AWS. It provides the virtual network through which the components you build on AWS communicate with each other, and it contains multiple network components of its own.

Within a VPC you can create private networks, subnets, and gateways for your own use. Route tables define how traffic flows between the different networks and subnets.

You can assign a public IP address to your EC2 instances, and you can also attach multiple network interfaces to an instance and assign public IP addresses to them. Access between networks and subnets within the VPC can be managed: for any network or subnet you create, you can define an ACL that allows or blocks the ports you choose.

VPC Subnet: After creating a network on AWS, you can create subnets of that network. By following the steps below, a subnet can be created on the AWS VPC.

To configure EKS (Elastic Kubernetes Service) on AWS, a previously created VPC and subnets are needed. This applies not only to EKS: other AWS services such as EC2 also require a VPC and subnets to be ready before they can be used.

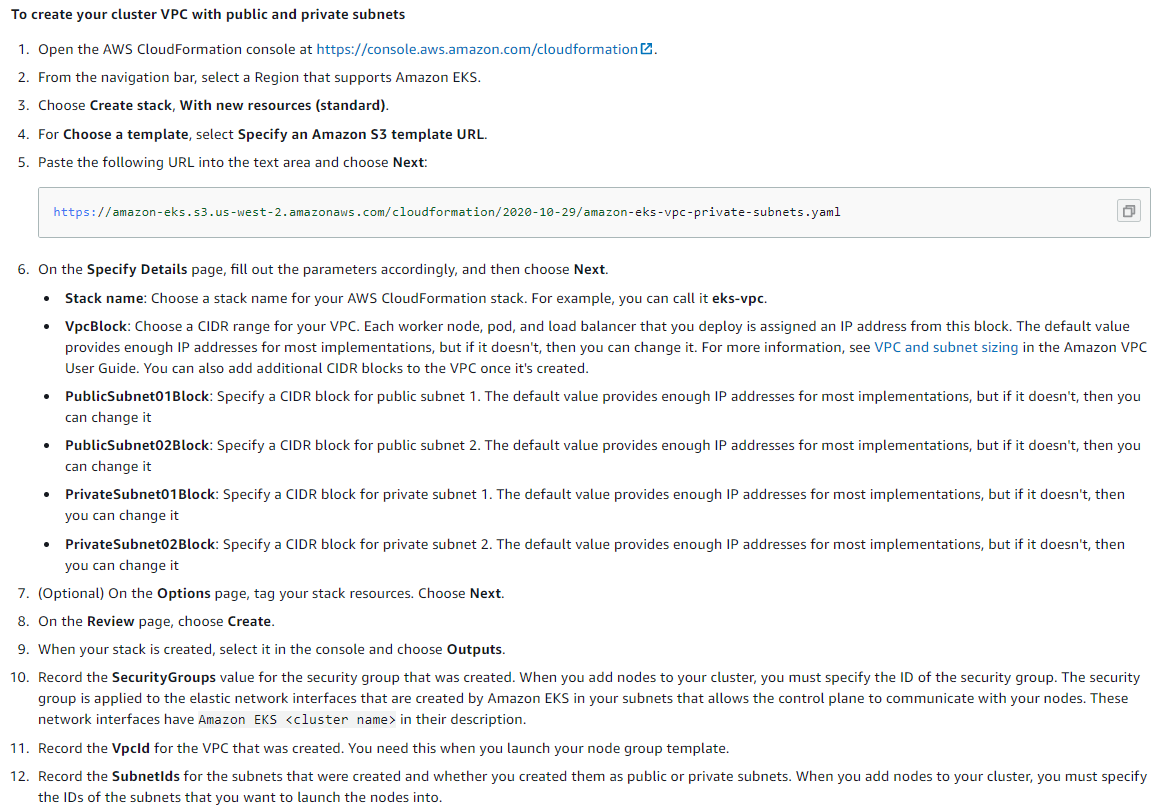

For EKS, the VPC and subnets must be set up correctly, and the subnets must be defined as outlined in the AWS document below. If you do not know how to define a VPC and subnets, you can use the ready-to-use AWS template.

For details: https://docs.aws.amazon.com/eks/latest/userguide/create-public-private-vpc.html

Creating and Configuring AWS VPC

When you follow the steps below, the appropriate network structure for EKS will be established automatically.

For details: https://console.aws.amazon.com/cloudformation.

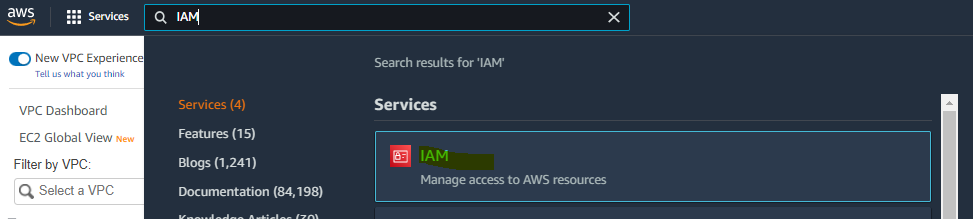

2. Configuring EKS IAM Roles

What is AWS Identity and Access Management (IAM) Role?

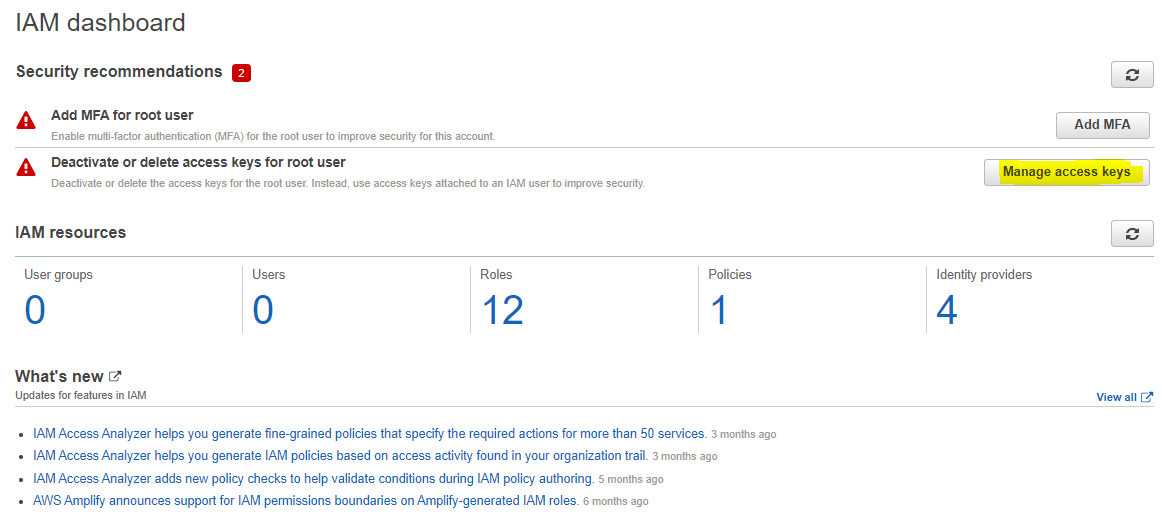

Using AWS Identity and Access Management (IAM), you can determine who can access AWS services and resources and under what circumstances.

IAM is a feature of your AWS account and is available at no additional cost.

In terms of structure, IAM is not an instance and does not work like a database. It is an account-level feature that manages every user who has access to the AWS account, controlling who can access which service and in what way.

Conceptually, IAM covers six main topics:

- Users

- Groups

- Roles

- IAM Access Policies

- API Keys

- Password policy, MFA

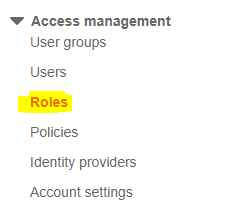

Creating and Configuring AWS IAM

A new Role is created by going to the Roles page from the left menu.

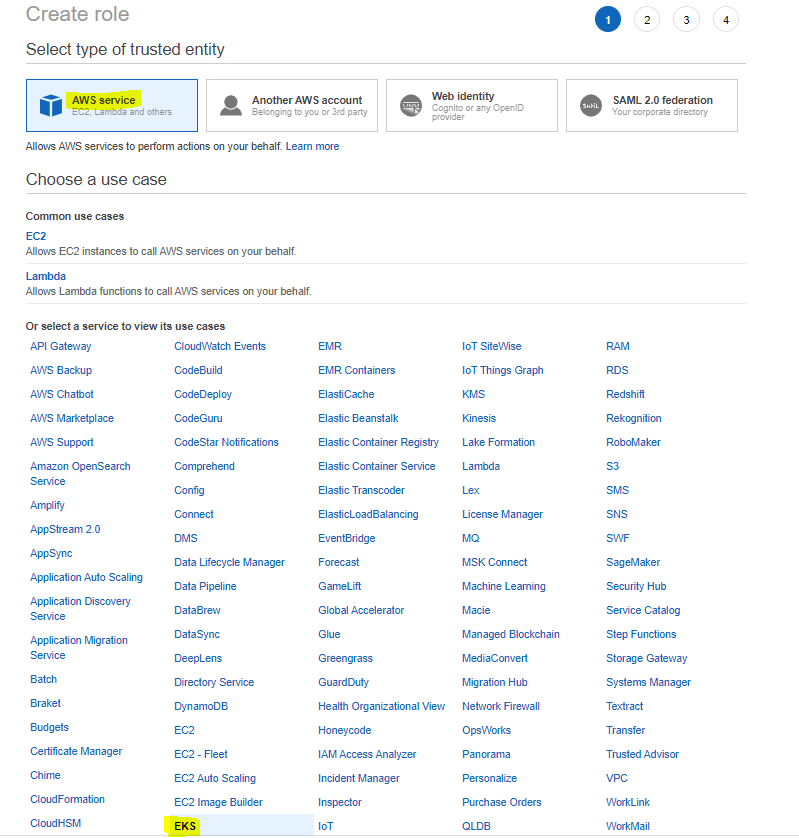

In this section, two Roles need to be created. The first is the Role used by the EKS cluster, and the second is the Role used by the Worker Node Groups in EKS.

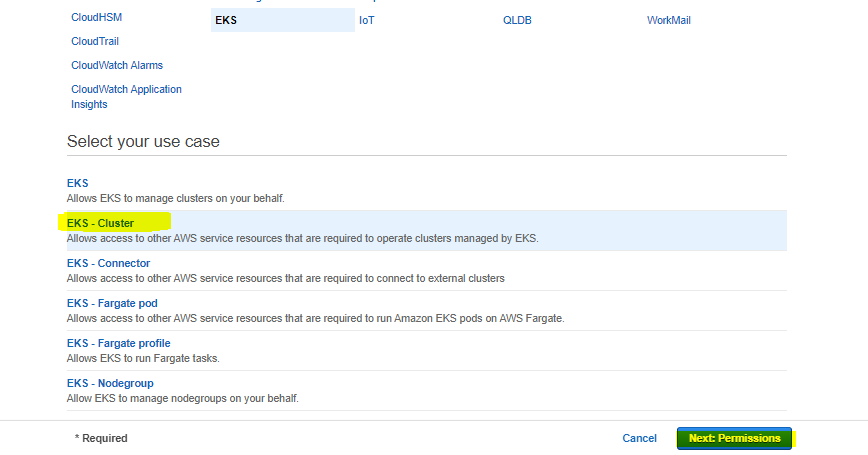

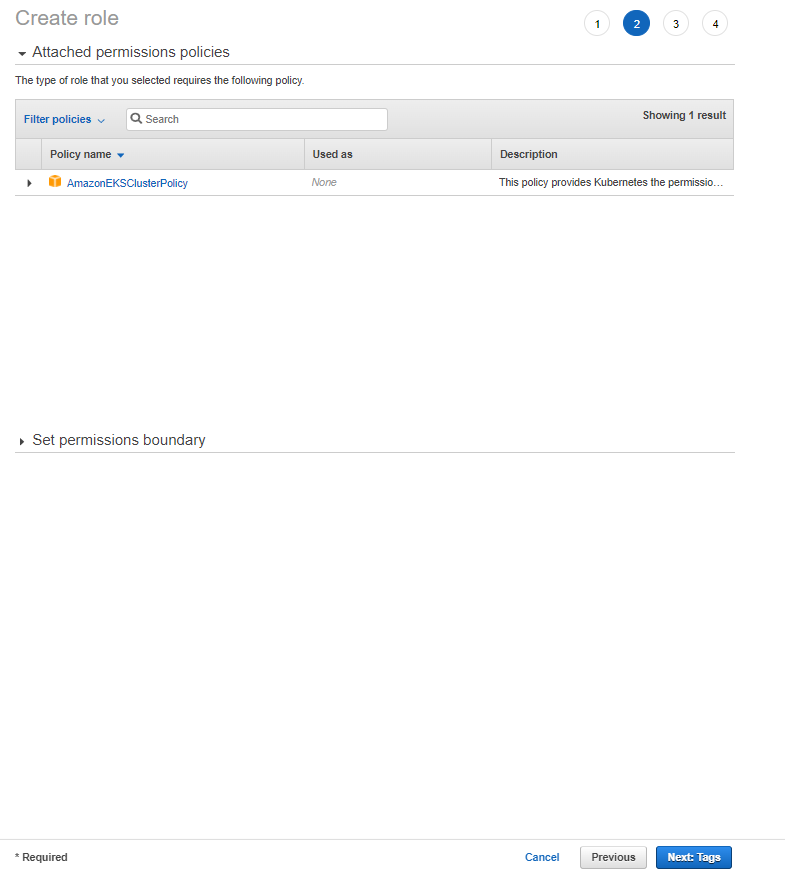

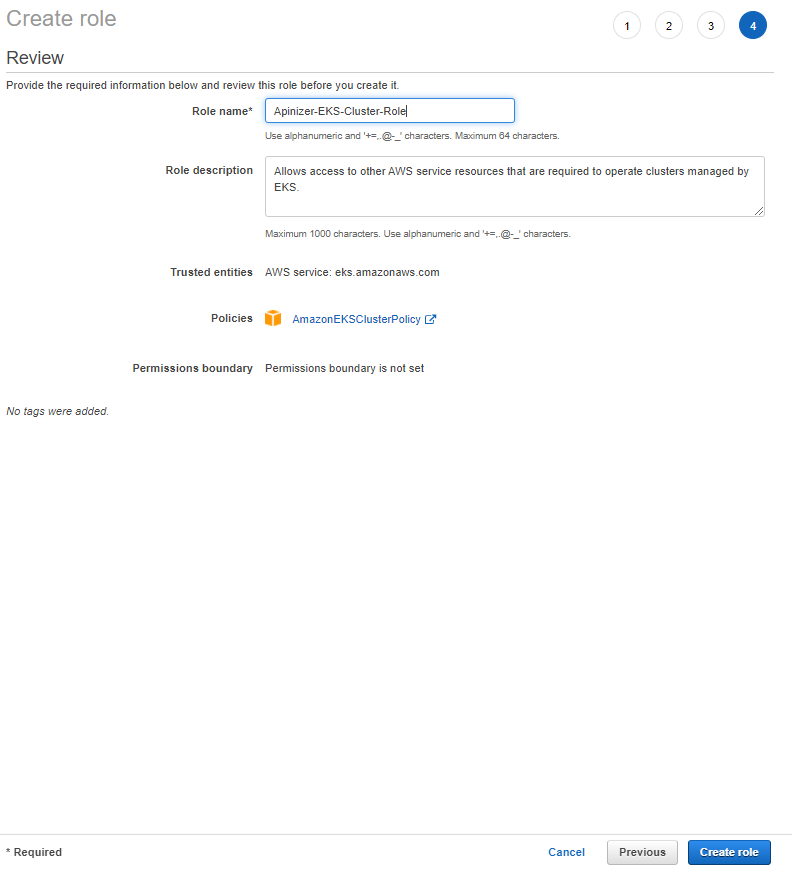

1. Creating the Role that the EKS Cluster Will Use

To create the role, perform the operations shown in the images in order.

Continue on the next page without entering any key-value information.

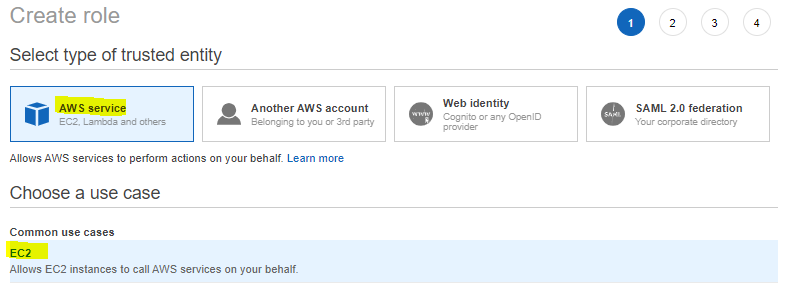

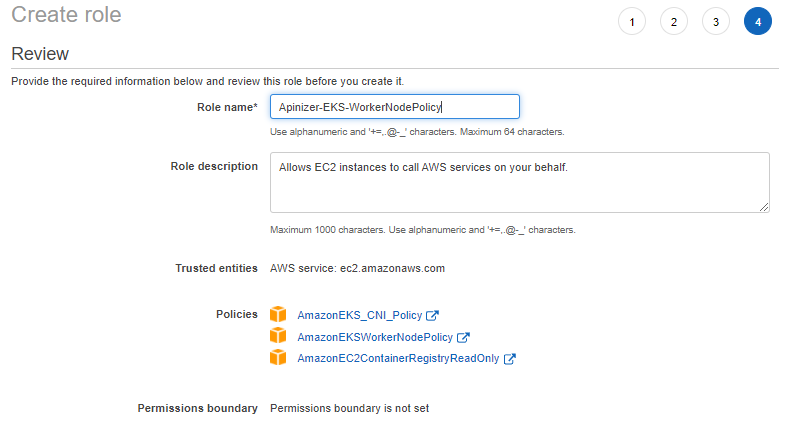

2. Creating the Role Used by EKS Cluster Worker Node Groups

The following Policies must be selected for this Role.

3. EKS Master Node Installation

What is AWS EKS (Elastic Kubernetes Service)?

The Amazon EKS control plane consists of control plane nodes that run Kubernetes software such as etcd and Kubernetes API server.

The control plane runs in an AWS-managed account, and the Kubernetes API is served through the Amazon EKS endpoint associated with your cluster. Each Amazon EKS cluster control plane is single-tenant and unique, and runs on its own set of Amazon EC2 instances.

A cluster contains one or more Amazon EC2 nodes where pods are scheduled.

Amazon EKS nodes run in your AWS account and connect to your cluster's control plane through the cluster API server endpoint.

You deploy one or more nodes to a node group. A node group is one or more Amazon EC2 instances deployed to an Amazon EC2 Auto Scaling group.

Creating and Configuring AWS EKS (Elastic Kubernetes Service)

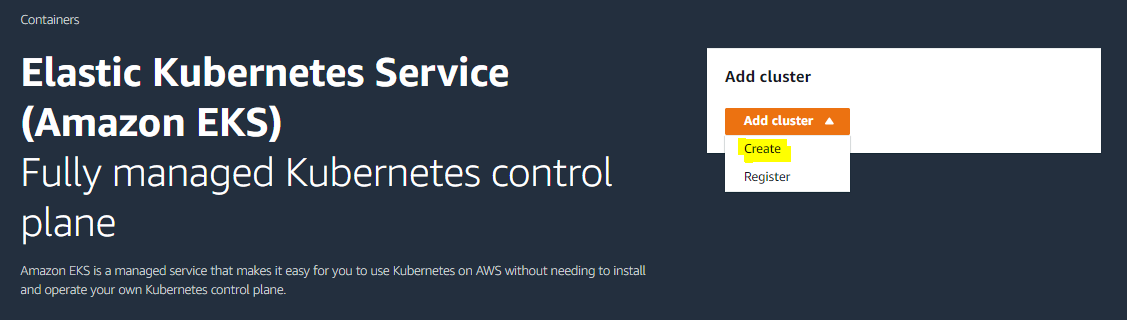

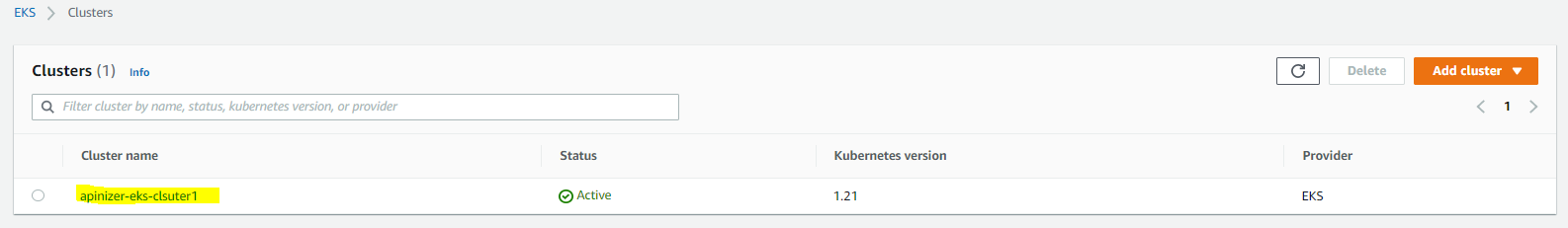

After completing the steps above, the AWS EKS installation can begin.

Type EKS in the search box to continue.

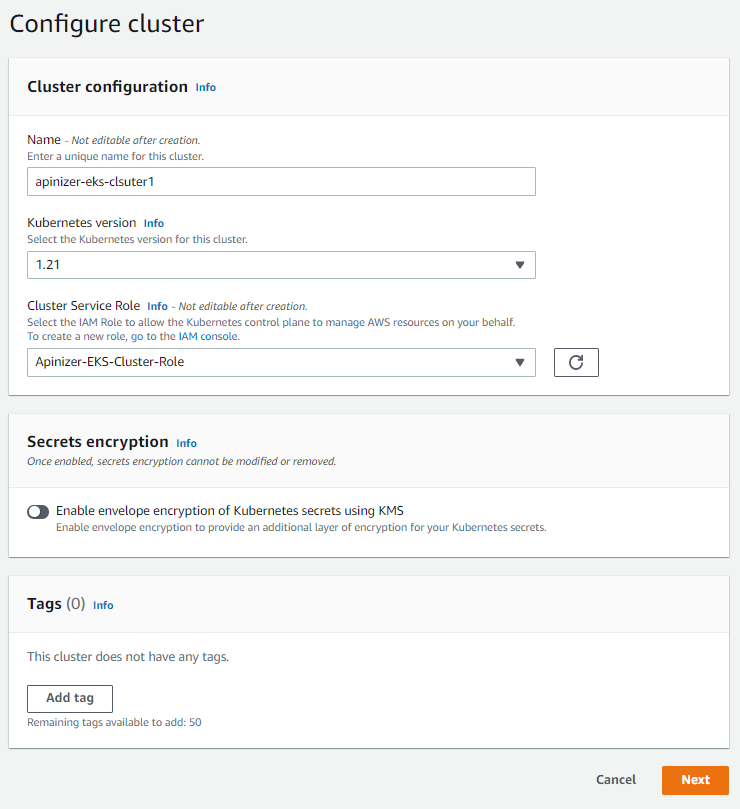

Enter the cluster name, then select the Kubernetes version you want to use and the IAM role created earlier.

Step 1: Configure the cluster

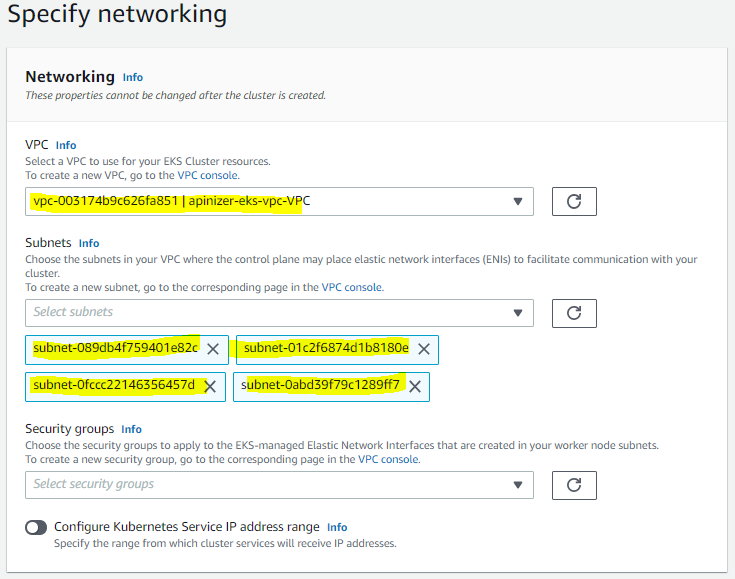

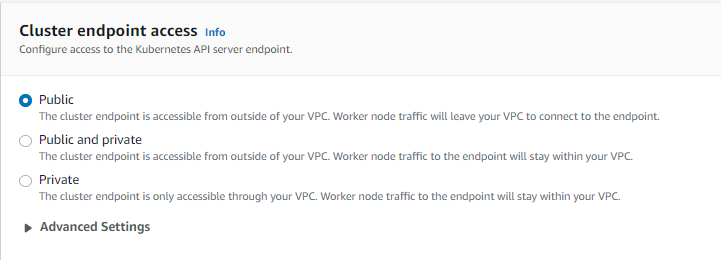

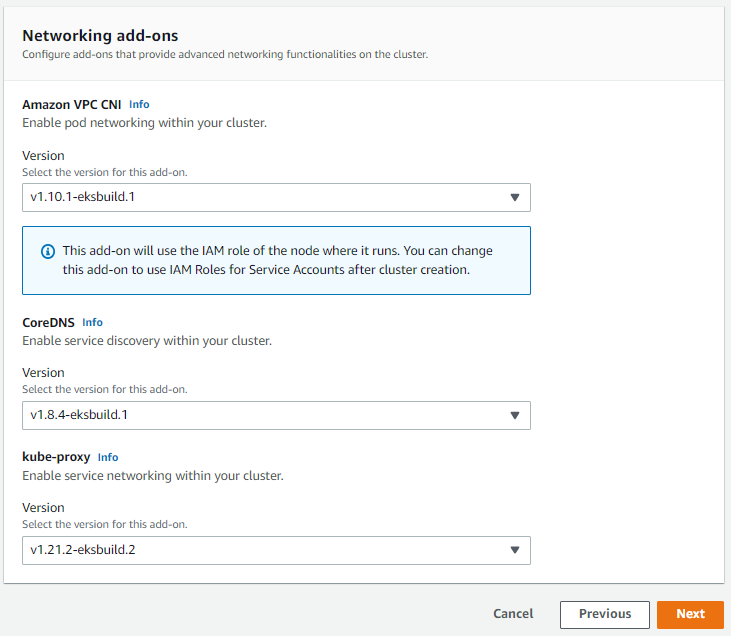

Step 2: Specifying network communication

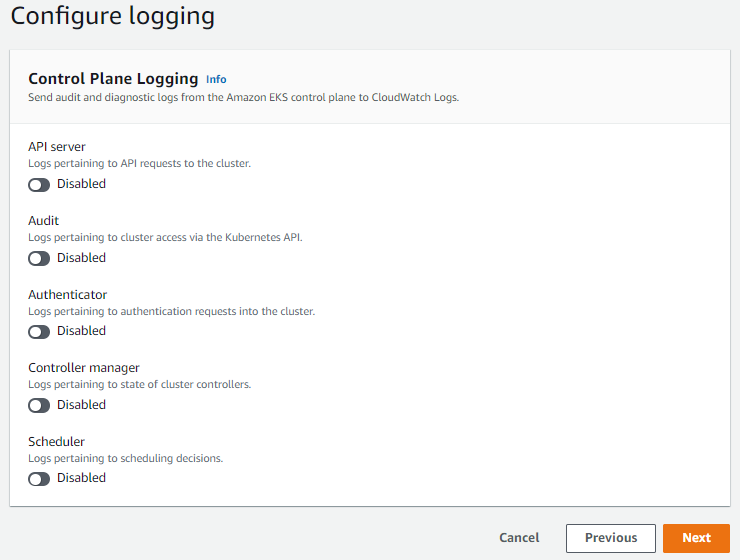

Step 3: Configure logging

This section can be configured as desired.

Step 4: Review and create

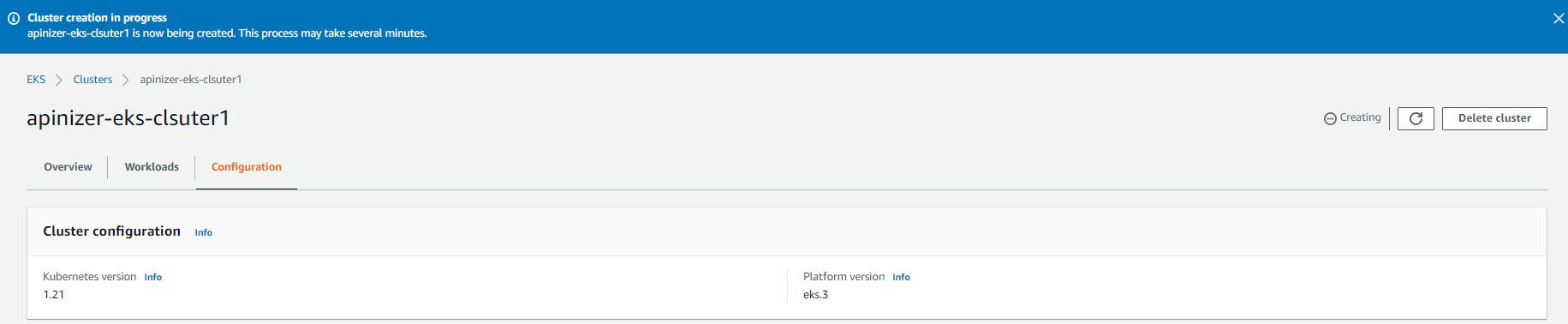

Finally, the defined information is displayed. If there is no error at this stage, click Create to start cluster creation.

The EKS cluster is then created. This process takes approximately 5 minutes. While it is running, you can download and configure the tools needed to access and manage EKS from the client computer.

4. Creating and Configuring AWS CLI and kubectl

Download the required tools from the addresses below. Since Windows is used as the client computer in this document, the versions suitable for Windows are downloaded.

AWS CLI Installation

After installing the downloaded .msi package, configure the AWS CLI for your cluster as follows. Link: https://docs.aws.amazon.com/cli/latest/userguide/cli-chap-getting-started.html

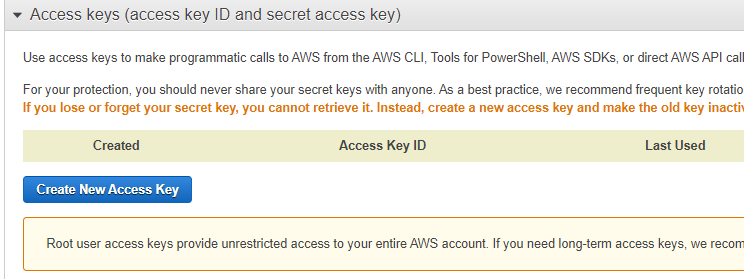

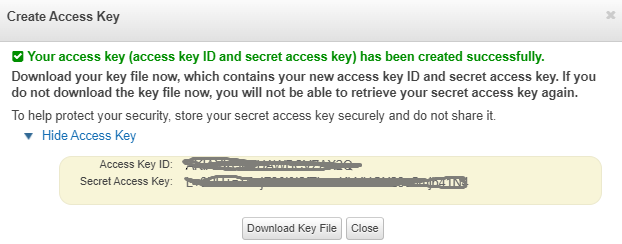

Step 1: Get the credential information from the IAM service

Click the Manage access keys button on the screen that appears to view the access information.

The Access Key and Secret are shown in the following window. Record this information somewhere before closing the window.

Step 2: Configure the AWS CLI on Windows

Start the configuration by entering the Access Key and Secret recorded earlier.
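The configuration itself is done with `aws configure`, which prompts for the Access Key ID, the Secret Access Key, the default region, and the default output format. As a minimal sketch, the helper below then checks that the stored credentials work by calling AWS STS; the function name `check_aws_credentials` is hypothetical, and a bash-compatible shell (for example Git Bash or WSL on Windows) is assumed.

```shell
# check_aws_credentials: succeeds when the configured credentials can call AWS STS.
check_aws_credentials() {
  local account
  # get-caller-identity returns the account that owns the configured access key.
  if account="$(aws sts get-caller-identity --query Account --output text 2>/dev/null)"; then
    echo "credentials OK for account $account"
  else
    echo "credentials missing or invalid; run 'aws configure' again" >&2
    return 1
  fi
}

# Usage (after running 'aws configure'):
#   check_aws_credentials
```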

kubectl Installation

Step 1: Download kubectl

The kubectl version that is downloaded must match the cluster version.

Link: https://docs.aws.amazon.com/eks/latest/userguide/install-kubectl.html
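Kubernetes supports a kubectl client that is within one minor version of the cluster's API server. The sketch below checks that rule for two version strings; the function names `minor_of` and `kubectl_skew_ok` are hypothetical, both versions are assumed to share the same major version, and a bash-compatible shell is assumed.

```shell
# minor_of VERSION: prints the minor component of a version such as "v1.21.5" or "1.21".
minor_of() {
  echo "$1" | sed 's/^v//' | cut -d. -f2
}

# kubectl_skew_ok CLIENT_VERSION CLUSTER_VERSION
# Succeeds when the client is at most one minor version older or newer than the cluster.
kubectl_skew_ok() {
  local client_minor cluster_minor diff
  client_minor="$(minor_of "$1")"
  cluster_minor="$(minor_of "$2")"
  diff=$(( client_minor - cluster_minor ))
  [ "$diff" -ge -1 ] && [ "$diff" -le 1 ]
}

# Example with illustrative version numbers:
if kubectl_skew_ok "v1.22.0" "1.21"; then
  echo "kubectl version is within the supported skew"
fi
```

The client version can be read with `kubectl version --client`, and the cluster version is the Kubernetes version selected when the cluster was created.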

Step 2: Defining required information to connect to EKS Cluster on Windows client machine

//Example Configuration Command

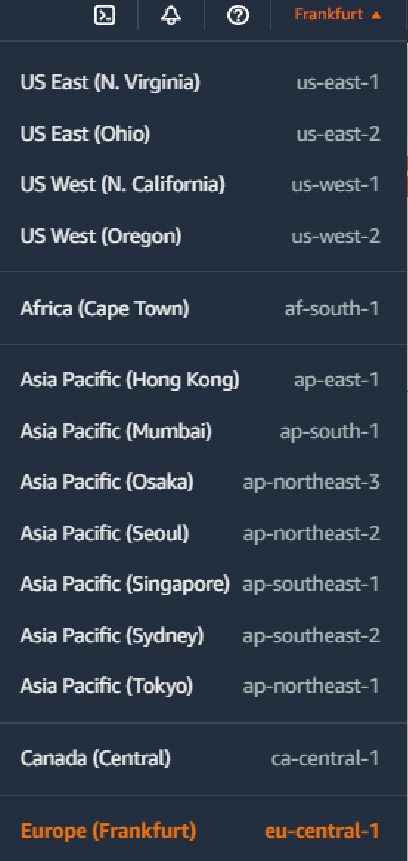

aws eks update-kubeconfig --region [EKS_Region] --name [EKS_Cluster_Name] The region information can be obtained from the top menu as seen in the picture below. Likewise, the cluster name can be obtained by going to the EKS page.

What we need is the part with eu-central-1.

//Configuration Command

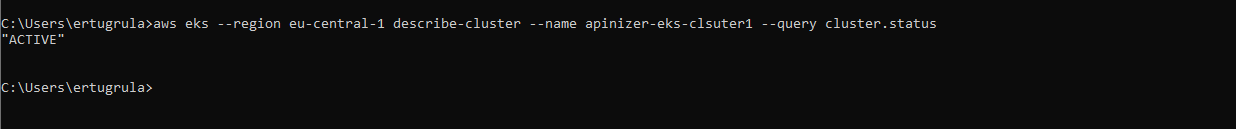

aws eks --region eu-central-1 describe-cluster --name apinizer-eks-clsuter1 --query cluster.status
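Because cluster creation takes several minutes, the status query can be repeated until the cluster reports ACTIVE. The following is a minimal polling sketch built on the same `describe-cluster` call; the function name `wait_for_cluster_active` and its retry defaults are assumptions, and a bash-compatible shell is assumed.

```shell
# wait_for_cluster_active REGION CLUSTER_NAME [MAX_TRIES] [SLEEP_SECONDS]
# Polls the cluster status and succeeds once it is ACTIVE.
wait_for_cluster_active() {
  local region="$1" name="$2" max_tries="${3:-40}" pause="${4:-15}"
  local try=1 status
  while [ "$try" -le "$max_tries" ]; do
    # --output text prints the bare status value (for example CREATING or ACTIVE).
    status="$(aws eks --region "$region" describe-cluster --name "$name" \
      --query cluster.status --output text)"
    echo "attempt $try: cluster status is $status"
    if [ "$status" = "ACTIVE" ]; then
      return 0
    fi
    sleep "$pause"
    try=$((try + 1))
  done
  return 1
}

# Usage (region and cluster name are placeholders):
#   wait_for_cluster_active [EKS_Region] [EKS_Cluster_Name]
```

The AWS CLI also provides a built-in waiter, `aws eks wait cluster-active`, which can be used instead of a hand-written loop.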

If more than one cluster is managed, update the kubeconfig for the cluster you want to work with as follows.

This updates the kubeconfig so that kubectl on the Windows command line manages the selected AWS EKS cluster.

aws eks --region eu-central-1 update-kubeconfig --name apinizer-eks-cluster2

5. Creating EKS Worker Nodes

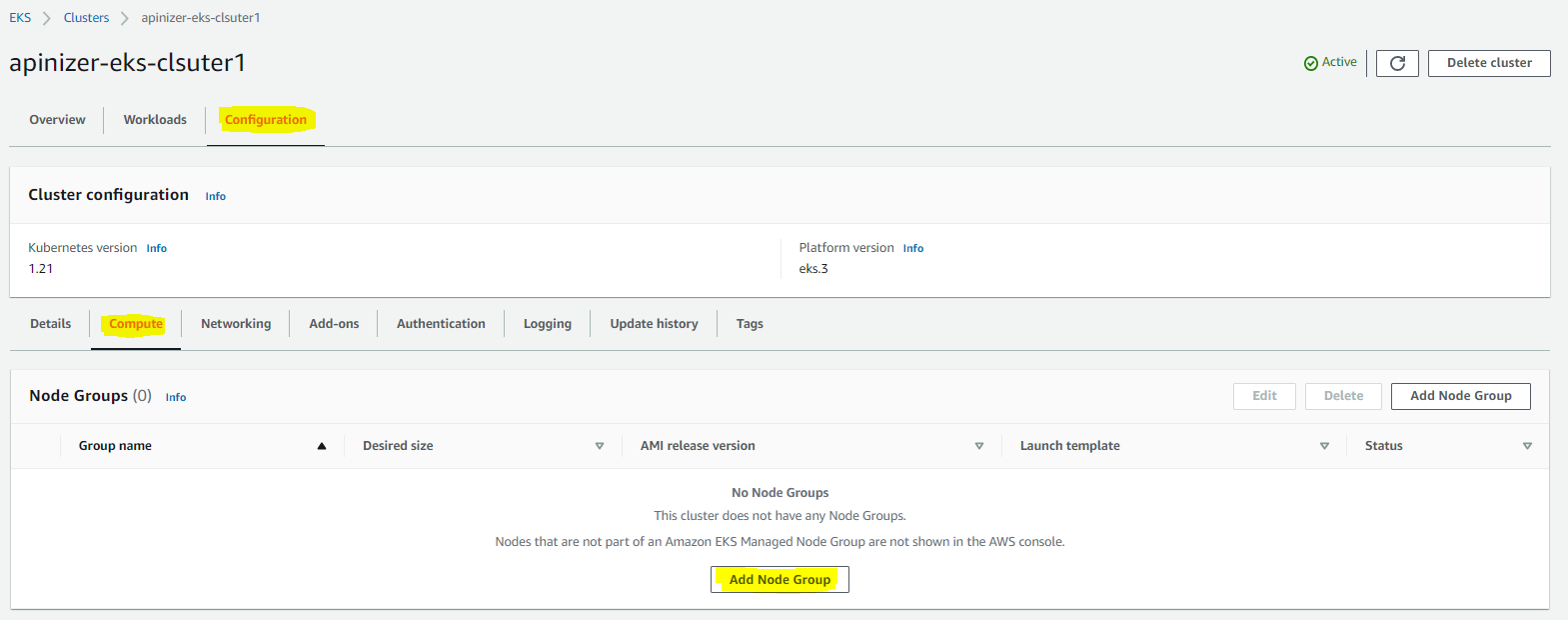

This section describes how to create Worker Nodes for EKS. Again, go to the AWS Console and follow the steps below.

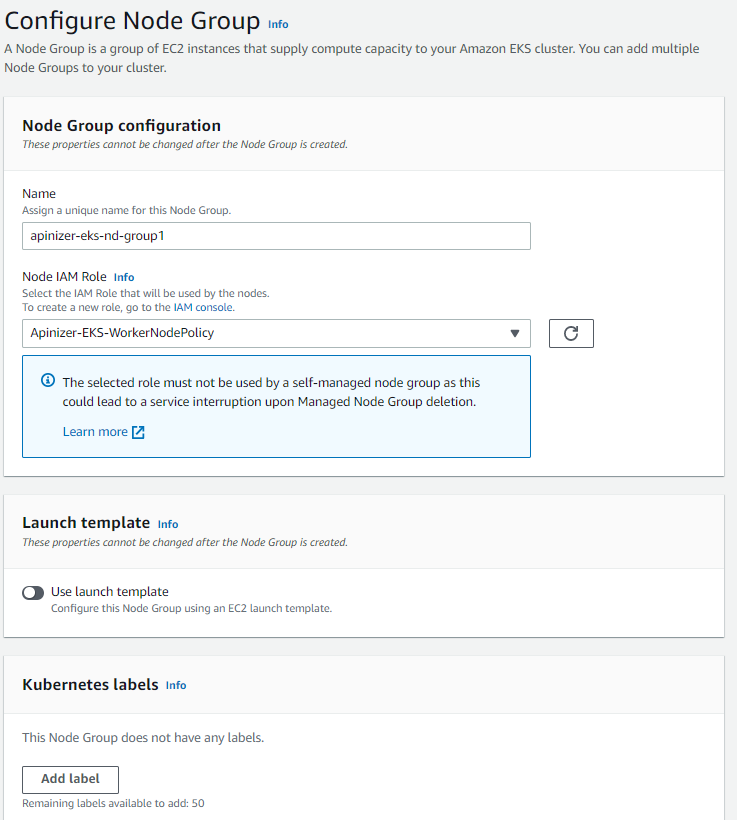

Step 1: Creating Node Group

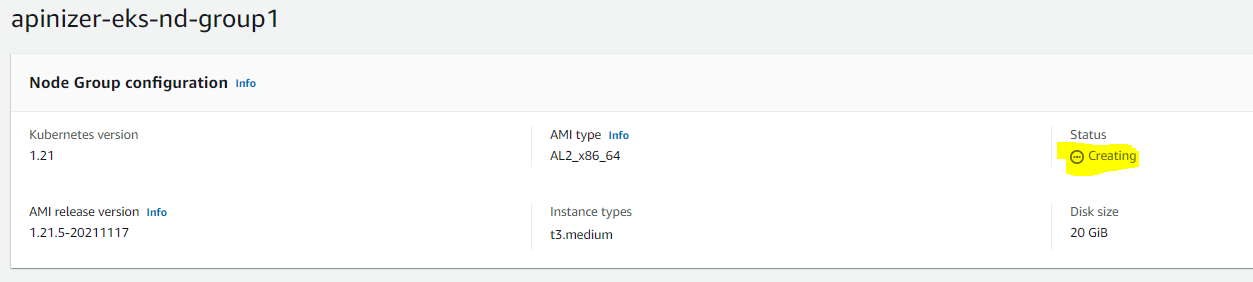

Step 2: Configuring Node Group

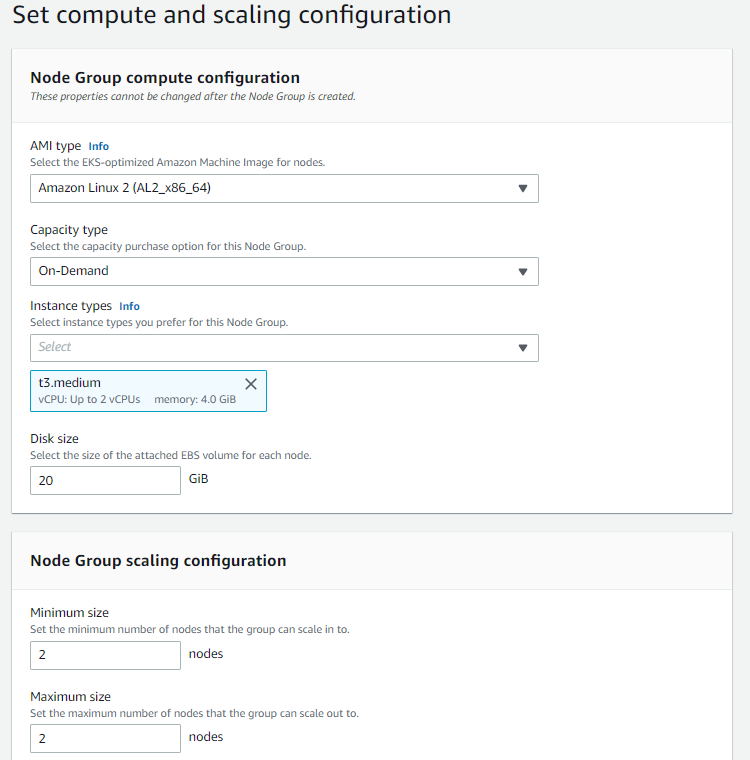

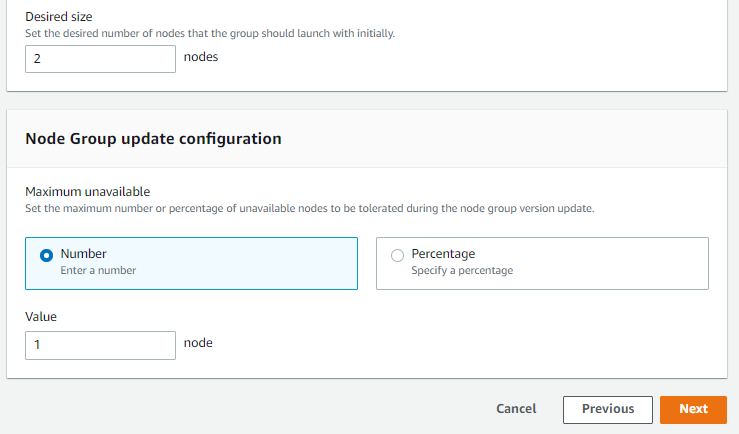

Step 3: Setting the Compute and Scaling Configuration

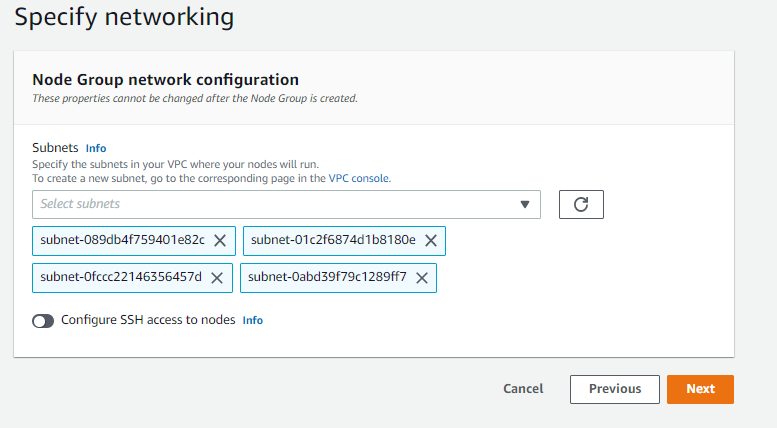

Step 4: Specifying Network Communication

Step 5: Review and Create

Finally, the defined information is displayed. If there are no mistakes at this stage, click Create to start creating the Node Group.

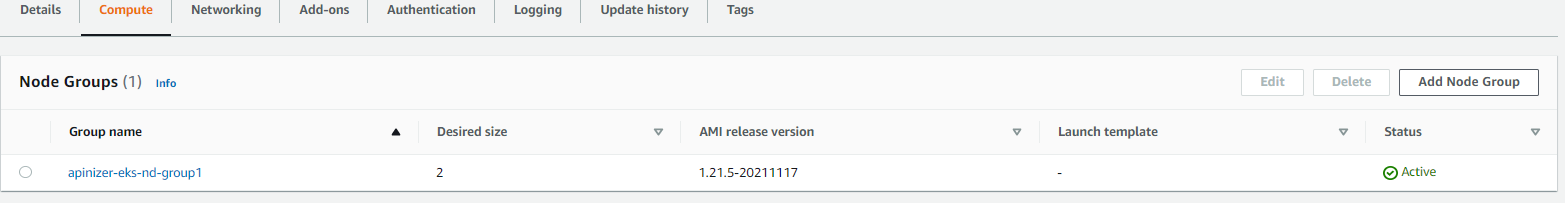

This process takes approximately 5 minutes. After the installation is completed and the Node Group is active, you can perform deployments and other operations with the previously configured kubectl.

Below is the image showing that the Node Group installation is complete:

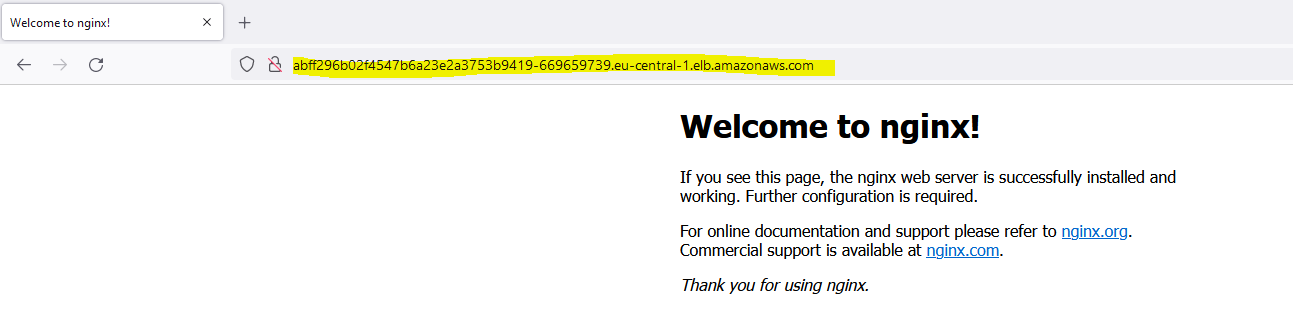

6. EKS Installation Test and Sample Deployment

This section explains how to check the Kubernetes cluster with the previously installed and configured kubectl, and how to test it with a sample nginx application.

AWS EKS Environment Test

Check the Kubernetes cluster resources with the commands below.

aws eks --region eu-central-1 describe-cluster --name apinizer-eks-cluster --query cluster.status

aws eks --region eu-central-1 update-kubeconfig --name apinizer-eks-cluster

kubectl get nodes
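To turn the `kubectl get nodes` check into something scriptable, the number of nodes in the Ready state can be counted. This is a small sketch, not part of the original procedure; the function name `count_ready_nodes` is hypothetical, and a bash-compatible shell with `awk` is assumed.

```shell
# count_ready_nodes: prints how many nodes report exactly "Ready" in the STATUS column.
count_ready_nodes() {
  # --no-headers drops the header row; column 2 of the default output is STATUS.
  # Nodes shown as "NotReady" or "Ready,SchedulingDisabled" are not counted.
  kubectl get nodes --no-headers | awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage:
#   echo "Ready nodes: $(count_ready_nodes)"
```

The result should match the number of nodes defined in the Node Group.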

Sample Application Installation and Testing

In this section, as an example, the Nginx application defined in the .yaml file below is deployed to the Kubernetes cluster, exposed as a service, and then tested.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-nginx
    spec:
      selector:
        matchLabels:
          run: my-nginx
      replicas: 4
      template:
        metadata:
          labels:
            run: my-nginx
        spec:
          containers:
          - name: my-nginx
            image: nginx
            ports:
            - containerPort: 80

kubectl apply -f D:\apinizer\AWS\nginx.yaml

deployment.apps/my-nginx created

kubectl get pods

    NAME                        READY   STATUS    RESTARTS   AGE
    my-nginx-5b56ccd65f-2782v   1/1     Running   0          35s
    my-nginx-5b56ccd65f-7dz9c   1/1     Running   0          35s
    my-nginx-5b56ccd65f-7rcvc   1/1     Running   0          35s
    my-nginx-5b56ccd65f-gqlxb   1/1     Running   0          35s

The application appears to have been successfully deployed to the Kubernetes cluster.

Expose the application as a service with the command below.

Example Configuration Command

kubectl expose deployment/my-nginx --port=80 --target-port=80 --name=my-nginx-service --type=LoadBalancer

service/my-nginx-service exposed

kubectl get svc

    NAME               TYPE           CLUSTER-IP      EXTERNAL-IP                                                                 PORT(S)        AGE
    kubernetes         ClusterIP      10.100.0.1      <none>                                                                      443/TCP        28m
    my-nginx-service   LoadBalancer   10.100.86.244   abff296b02f4547b6a23e2a3753b9419-669659739.eu-central-1.elb.amazonaws.com   80:31867/TCP   2m23s

//for testing

curl -k abff296b02f4547b6a23e2a3753b9419-669659739.eu-central-1.elb.amazonaws.com

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

7. Installing Apinizer on EKS

Unlike the standard Apinizer installation documents, this section also describes how to expose Apinizer as a service.

Apinizer API Manager installation

The .yaml file prepared according to the definitions in the Installation and Configuration document should be applied to the AWS EKS cluster.

As stated in the Installation and Configuration document, a MongoDB replica set and Elasticsearch are required.

- For MongoDB, you can create an account at https://account.mongodb.com/account/register; the service is free up to a limited database size. Apinizer does not need a large database, as it keeps only configuration definitions in MongoDB.

- Similarly, for Elasticsearch you can use a managed Elastic service in the cloud by registering at https://cloud.elastic.co/registration. Alternatively, a MongoDB replica set and Elasticsearch can be installed on an EC2 server on AWS.

Below is a sample apinizer-deployment.yaml file.

    apiVersion: v1
    kind: Namespace
    metadata:
      name: apinizer
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: manager
      namespace: apinizer
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: manager
          version: 'v1'
      template:
        metadata:
          labels:
            app: manager
            version: 'v1'
        spec:
          containers:
          - name: manager
            image: apinizercloud/manager:2022.02.5
            imagePullPolicy: IfNotPresent
            env:
            - name: SPRING_PROFILES_ACTIVE
              value: prod
            - name: SPRING_DATA_MONGODB_DATABASE
              value: apinizerdb-aws
            - name: SPRING_DATA_MONGODB_URI
              value: 'YOUR_MONGODB_URL'
            - name: JAVA_OPTS
              value: ' -Xmx1400m -Xms1400m'
            resources:
              requests:
                memory: '2Gi'
                cpu: '1'
              limits:
                memory: '2Gi'
                cpu: '1'
            ports:
            - name: http
              containerPort: 8080

kubectl apply -f D:\apinizer\AWS\apinizer-deployment.yaml

namespace/apinizer created

deployment.apps/manager created

kubectl get pods -n apinizer

    NAME                      READY   STATUS    RESTARTS   AGE
    manager-646fcdd894-ktt97  1/1     Running   1          42s

After Apinizer API Manager is installed in the EKS environment, the database named in SPRING_DATA_MONGODB_DATABASE (apinizerdb-aws in the sample above) is created in MongoDB. The license key given to you by Apinizer must be entered in the general_settings table.

The following permissions must be defined for accessing and managing Kubernetes resources via Apinizer API Manager.

Below is a sample service.yaml file.

    apiVersion: v1
    kind: Namespace
    metadata:
      name: kube-system
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: admin-user
      namespace: kube-system

Below is a sample adminuser.yaml file.

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: admin-user
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: admin-user
      namespace: kube-system

kubectl apply -f service.yaml

kubectl apply -f adminuser.yaml

kubectl create clusterrolebinding permissive-binding --clusterrole=cluster-admin --user=admin --user=kubelet --group=system:serviceaccounts

kubectl create clusterrolebinding apinizer -n kube-system --clusterrole=cluster-admin --serviceaccount=kube-system:apinizer

Creating Access Service for Apinizer API Manager

After the installation is complete, a service is required to access the Apinizer API Manager.

A service is created by following the steps below.

Sometimes it may take a minute or two to get an external IP.
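Since the external address is not available immediately, a script can poll the service until AWS assigns the load balancer hostname. The helper below is a minimal sketch to run after the `kubectl expose` command that follows; the function name `wait_for_lb_hostname` and its retry defaults are assumptions, and a bash-compatible shell is assumed.

```shell
# wait_for_lb_hostname NAMESPACE SERVICE [MAX_TRIES] [SLEEP_SECONDS]
# Prints the load balancer hostname once it has been assigned.
wait_for_lb_hostname() {
  local ns="$1" svc="$2" max_tries="${3:-20}" pause="${4:-10}"
  local try=1 host
  while [ "$try" -le "$max_tries" ]; do
    # The hostname field stays empty until the load balancer is provisioned.
    host="$(kubectl get svc "$svc" -n "$ns" \
      -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' 2>/dev/null)"
    if [ -n "$host" ]; then
      echo "$host"
      return 0
    fi
    sleep "$pause"
    try=$((try + 1))
  done
  return 1
}

# Usage (names taken from the expose command in this section):
#   wait_for_lb_hostname apinizer apinizer-console-service
```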

kubectl expose -n apinizer deployment.apps/manager --port=80 --target-port=8080 --name=apinizer-console-service --type=LoadBalancer

service/apinizer-console-service exposed

kubectl get svc -n apinizer

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

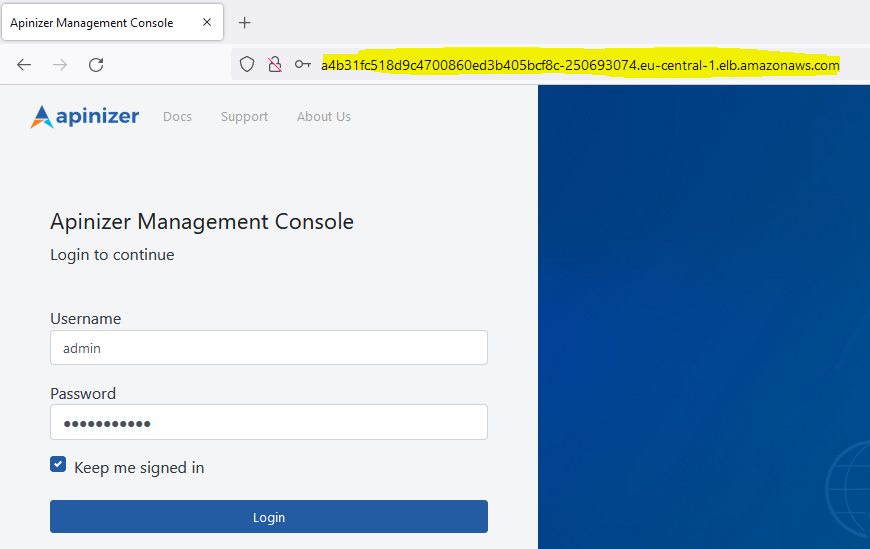

apinizer-console-service LoadBalancer 10.100.35.144 a4b31fc518d9c4700860ed3b405bcf8c-250693074.eu-central-1.elb.amazonaws.com 80:31816/TCP 15sApinizer API Manager Login

You can log in by typing the service address (the EXTERNAL-IP value obtained above) into the address bar of the browser.

You can contact Apinizer support team for the default username and password.

Apinizer API Manager Address: http://a4b31fc518d9c4700860ed3b405bcf8c-250693074.eu-central-1.elb.amazonaws.com/

8. Apinizer Configurations

Defining Log Servers

Apinizer stores API traffic and metrics in the Elasticsearch database. Elasticsearch cluster definitions must be made in order to continue with the installation process.

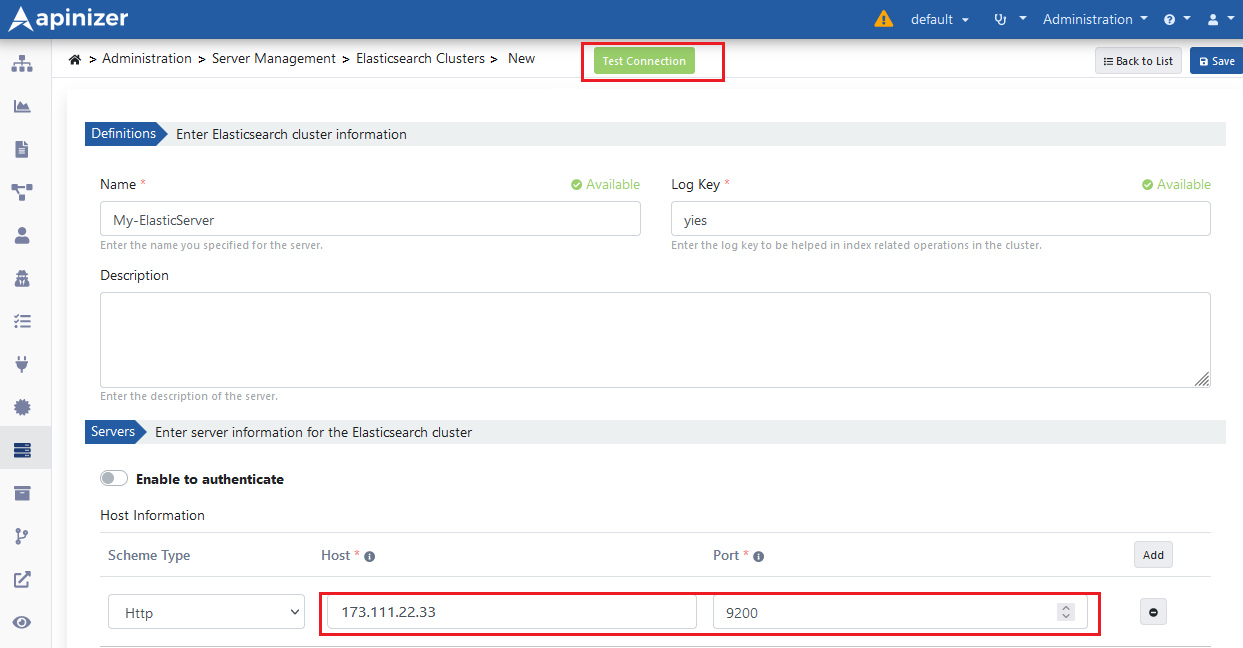

In Apinizer Administration Console, go to Administration → Server Management → Elasticsearch Clusters page from the menu.

The picture below shows the Elasticsearch cluster definition settings:

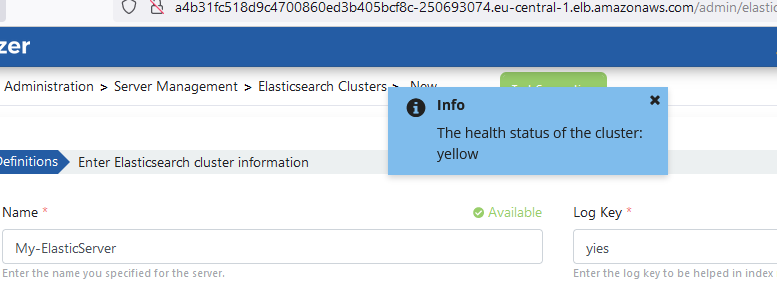

The Test Connection button can be used to test the connection between Apinizer and the Elasticsearch server.

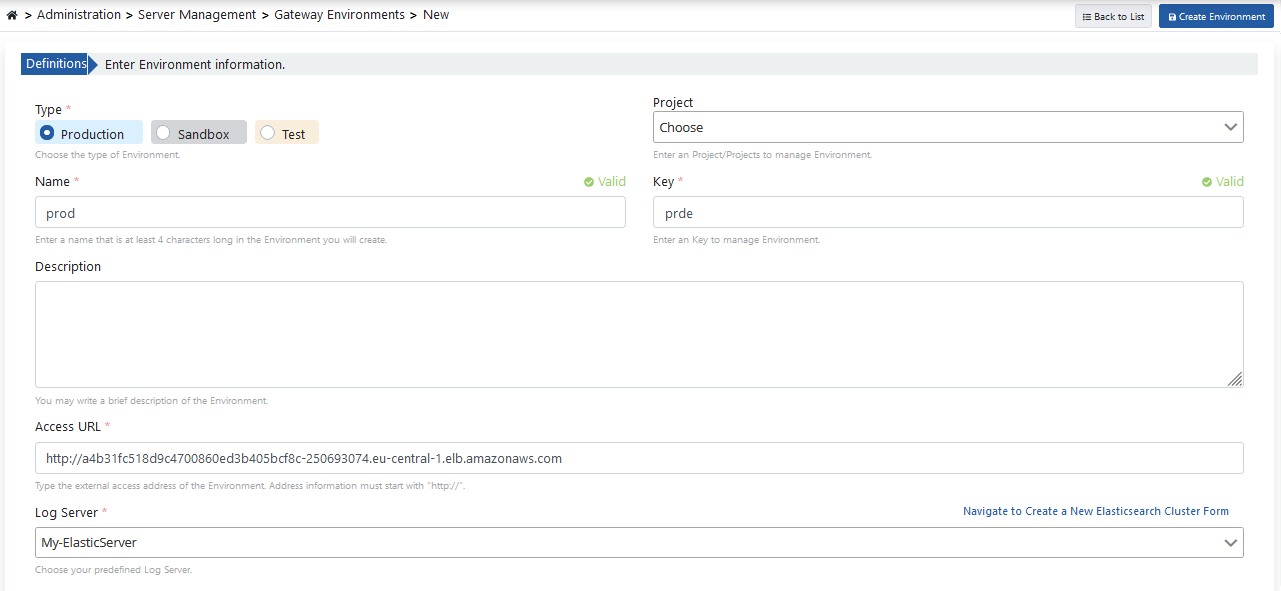

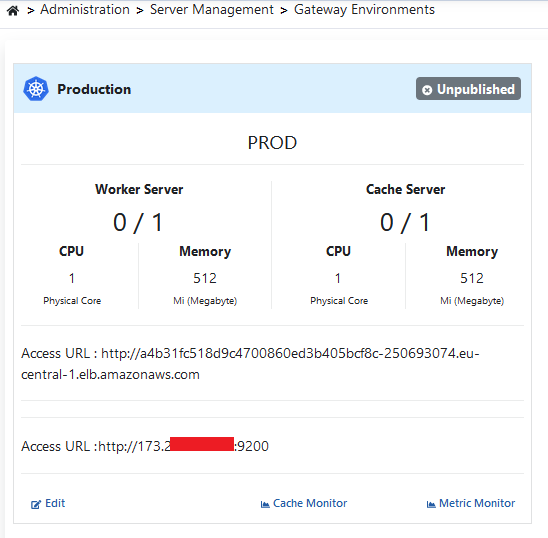

Defining the Environment

For an API Proxy to be accessible, at least one environment must be deployed. Apinizer also allows an API Proxy to be deployed to multiple environments.

In the Apinizer API Manager application, go to the Administration → Server Management → Gateway Environment page from the menu.

The picture below shows the environment definition settings:

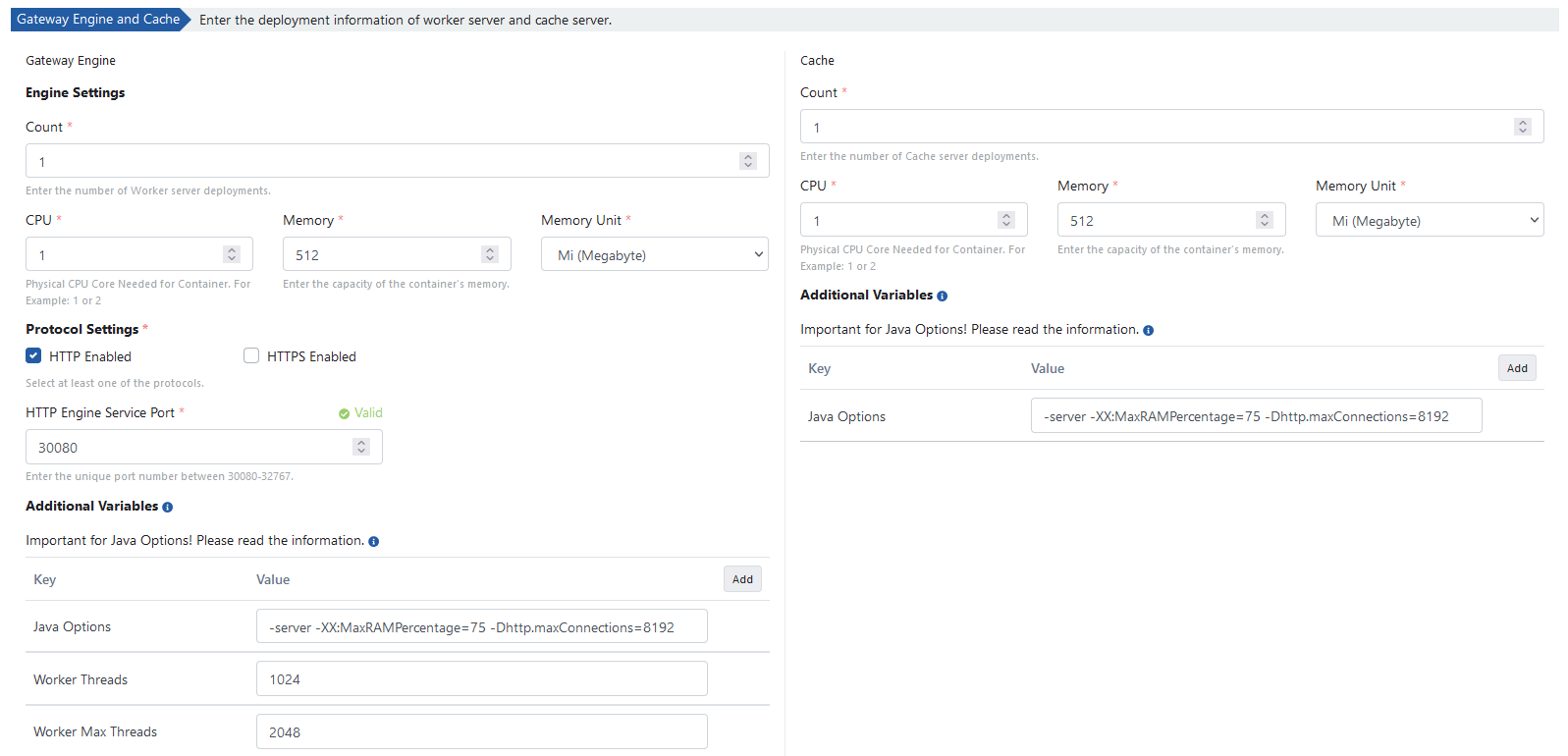

The values below are the default values for the servers. They can be changed according to the resources of your cluster.

Click the Create Environment button to complete the environment definition.

In the environment list, deploy the Prod-type environment to EKS by clicking the Unpublished button, which shows the status of the environment, and then the Publish button.

The deployment to EKS takes approximately 3 minutes.

Click for detailed information about environment definition and deploying processes.
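To confirm from the command line that the published environment has finished deploying, `kubectl rollout status` can be used. The sketch below assumes the environment runs as a deployment named `worker` in the `prod` namespace, as in the expose command in the next section; the function name `wait_for_rollout` and the 180-second default timeout are assumptions, and a bash-compatible shell is assumed.

```shell
# wait_for_rollout NAMESPACE DEPLOYMENT [TIMEOUT]
# Blocks until the deployment has rolled out or the timeout expires.
wait_for_rollout() {
  local ns="$1" deploy="$2" timeout="${3:-180s}"
  kubectl rollout status "deployment/$deploy" -n "$ns" --timeout="$timeout"
}

# Usage:
#   wait_for_rollout prod worker
```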

Opening the Environment as a Service

Finally, a service needs to be created to access the APIs that will run in the Environment we published.

kubectl expose -n prod deployment.apps/worker --port=80 --target-port=8091 --name=apinizer-worker-service --type=LoadBalancer

service/apinizer-worker-service exposed

kubectl get svc -n prod

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

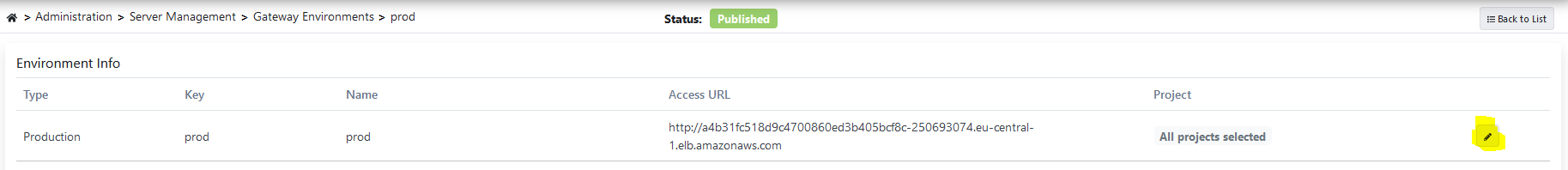

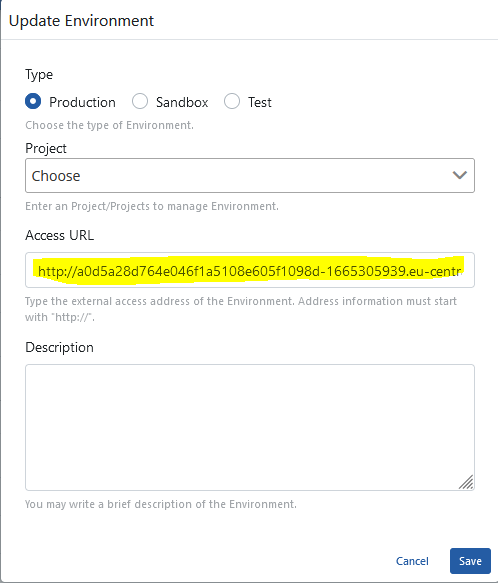

apinizer-worker-service LoadBalancer 10.100.203.201 a0d5a28d764e046f1a5108e60-1665305939.eu-central-1.elb.amazonaws.com 80:31447/TCP 54sThe AWS-generated LoadBalancer (EXTERNAL-IP) value must be set as the Access URL value of the defined Prod Environment.

First, the edit button in the Environment Info table of the page where the environment is clicked.

After that, the Access URL information of the environment is changed by clicking the edit button.

9. Publishing and Testing the First API in Apinizer

This last step explains how to publish an API in Apinizer as an API Proxy by using a sample API definition file.

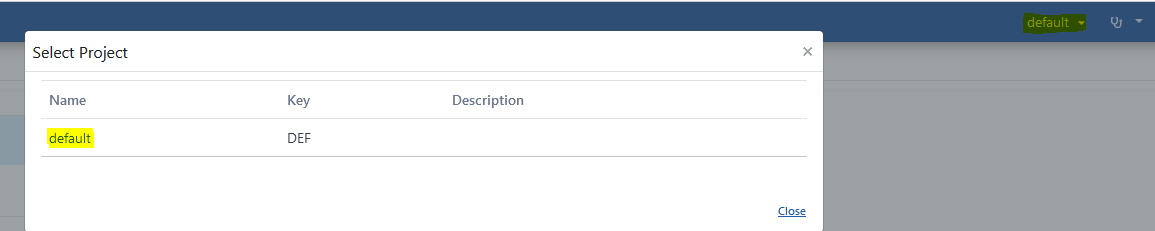

The project where API Proxy will be registered is selected from the navbar menu.

From the project menu in the Apinizer API Manager application, go to the Development → API Proxies page.

Click the Proxy button to define a new API Proxy.

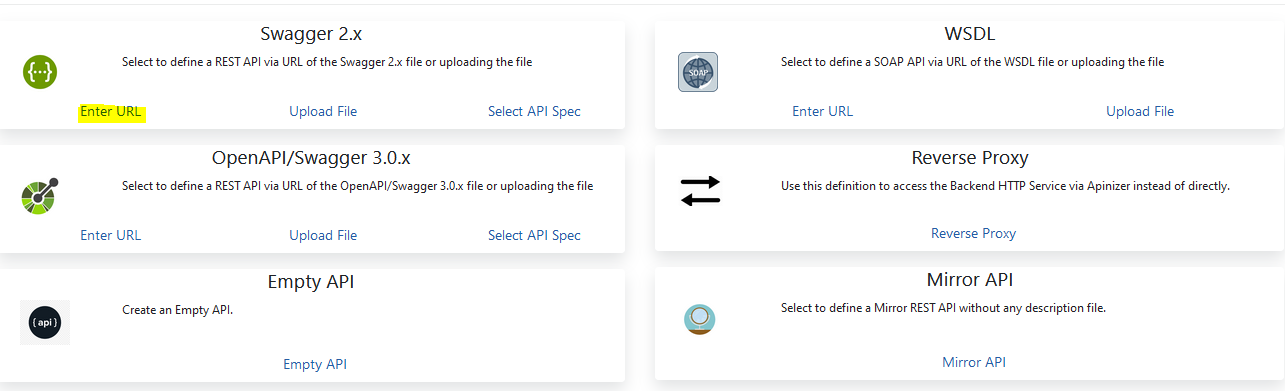

In the image below, the source from where the API Proxy will be created is selected with the selected link.

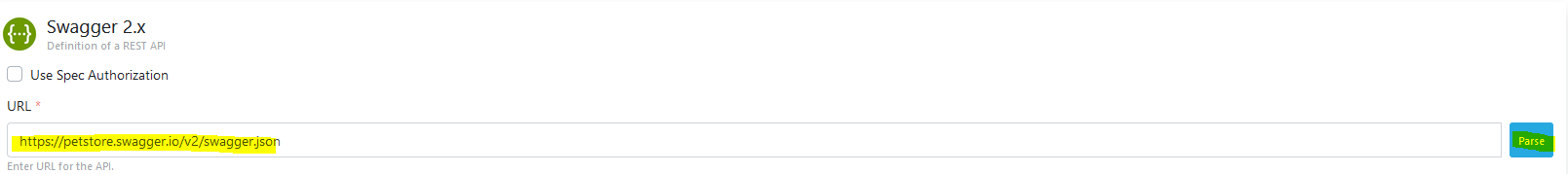

It is parsed by entering the link of the API Definition file.

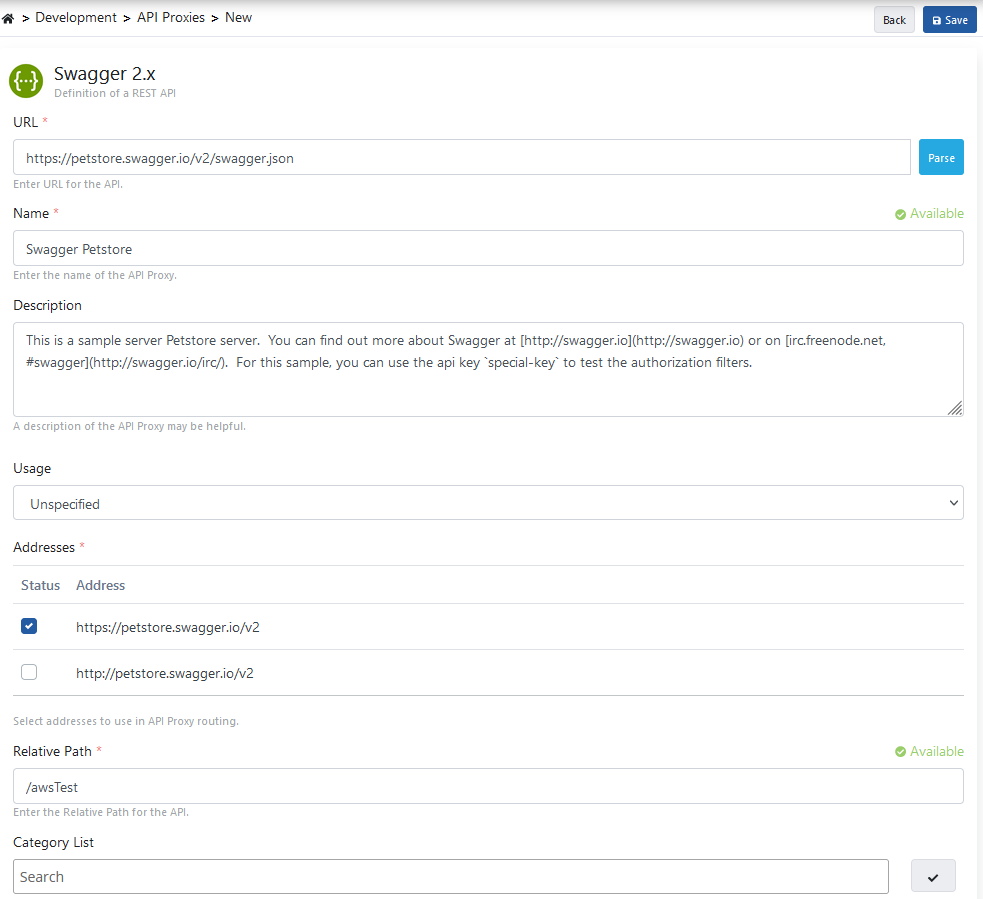

API Proxy information is entered and the Save button is clicked.

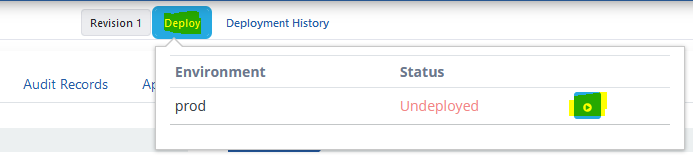

The Deploy button selected in the image below is used to deploy the API Proxy.

The deployment is confirmed.

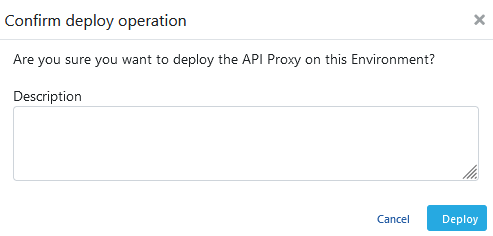

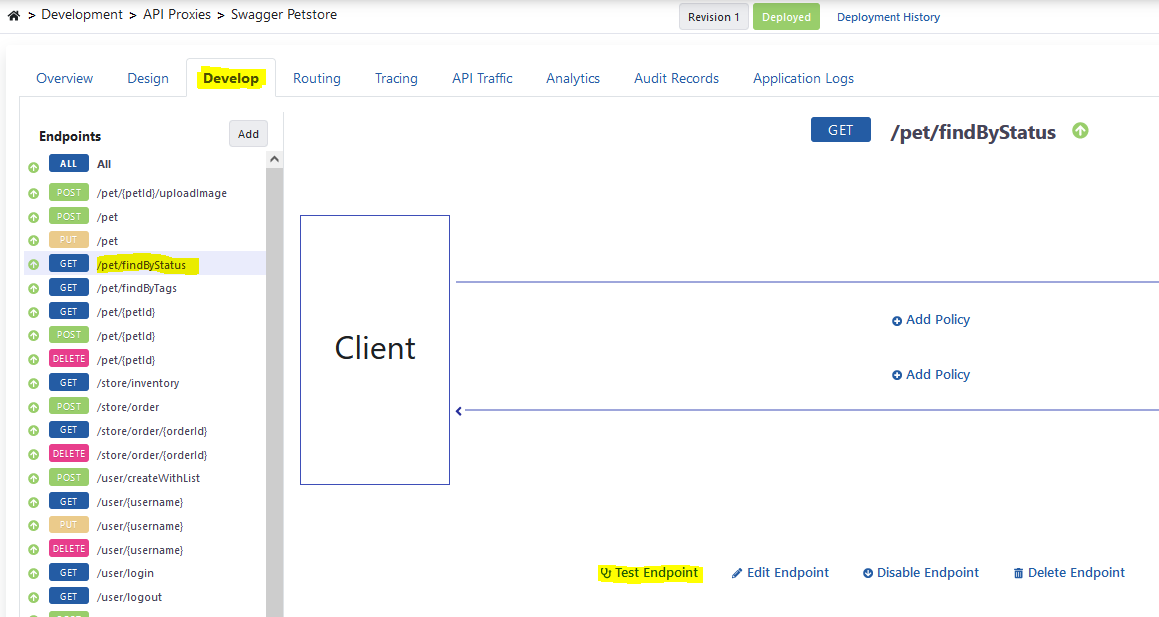

From the Develop tab of API Proxy, the Test Endpoint button on the endpoint to be tested is clicked.

The picture below shows the test dialog:

For detailed information about API Proxy, see the Developer Guide.

For detailed information about the Test Console, see the related Apinizer documentation.